rag by neuml

Streamlit app for Retrieval Augmented Generation (RAG) with txtai

Top 67.5% on SourcePulse

This project provides a Streamlit application for Retrieval Augmented Generation (RAG), enabling users to combine search and Large Language Models (LLMs) for data-driven insights. It supports both traditional Vector RAG and a novel Graph RAG approach, making it suitable for researchers and developers looking to enhance LLM accuracy with custom data.

How It Works

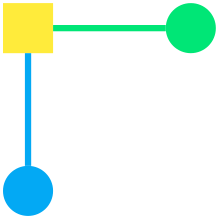

The application leverages the txtai library to implement RAG. Vector RAG uses vector search to retrieve the most relevant documents, which are then fed into an LLM prompt. Graph RAG extends this by incorporating knowledge graphs, allowing context generation through graph path traversals. This graph-based approach can improve factual accuracy and provide richer context by exploring relationships between concepts.
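The retrieve-then-prompt flow of Vector RAG can be illustrated with a minimal, self-contained sketch. This is not txtai's API: it swaps in a toy bag-of-words cosine similarity for real embeddings, and the names `retrieve` and `build_prompt`, the sample documents, and the prompt wording are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def vectorize(text):
    # Toy stand-in for an embedding model: lowercase bag-of-words counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, limit=2):
    # Rank documents by similarity to the query and keep the best matches.
    query_vector = vectorize(query)
    scored = [(cosine(query_vector, vectorize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:limit] if score > 0]


def build_prompt(question, context):
    # Hypothetical prompt template: retrieved text is placed ahead of the question.
    joined = "\n".join(f"- {item}" for item in context)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )


# Hypothetical sample data, for illustration only.
documents = [
    "txtai is an embeddings database for semantic search",
    "Streamlit turns Python scripts into web apps",
    "Knowledge graphs connect related concepts with edges",
]
question = "What is txtai used for?"
context = retrieve(question, documents, limit=1)
prompt = build_prompt(question, context)
print(prompt)
```

In a real deployment the resulting prompt would be passed to an LLM; that call is left out here because it depends on the model and backend chosen.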

Quick Start & Requirements

- Docker: `docker run -d --gpus=all -it -p 8501:8501 neuml/rag`

- Python: `pip install -r requirements.txt` followed by `streamlit run rag.py`

- Prerequisites: GPU with CUDA support is recommended for LLM inference. Specific LLMs (e.g., Llama 3.1 AWQ) may have architecture-specific requirements (x86-64). Ollama can be used for local LLM serving.

- Configuration: Environment variables control LLM, embeddings, and data indexing. See documentation.

Highlighted Details

- Supports two RAG methods: Vector RAG and Graph RAG (including graph query expansion and path traversal).

- Allows adding data via file paths, URLs, or direct text input using the `#` prefix.

- Configurable via environment variables for LLM choice (e.g., Llama 3.1, GPT-4o), embeddings, and persistence.

- Includes example configurations for Docker, Ollama, and custom data indexing.
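The idea of generating context through a graph path traversal can be sketched with a small breadth-first search. This is a toy under stated assumptions, not the project's or txtai's implementation: the graph, the node texts, and the helper names `shortest_path` and `path_context` are hypothetical.

```python
from collections import deque


def shortest_path(graph, start, goal):
    # Breadth-first search; returns the node sequence from start to goal,
    # or an empty list when either node is missing or no path exists.
    if start not in graph or goal not in graph:
        return []
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return []


def path_context(path, texts):
    # Collect the text attached to each node along the path, in order.
    return [texts[node] for node in path if node in texts]


# Hypothetical concept graph (adjacency lists) and node texts.
graph = {
    "rag": ["vector search", "llm"],
    "vector search": ["rag", "embeddings"],
    "llm": ["rag", "prompt"],
    "embeddings": ["vector search", "knowledge graph"],
    "prompt": ["llm"],
    "knowledge graph": ["embeddings"],
}
texts = {
    "rag": "RAG combines search with an LLM.",
    "vector search": "Vector search retrieves the most relevant documents.",
    "embeddings": "Embeddings map text to vectors.",
    "knowledge graph": "A knowledge graph links related concepts.",
}

path = shortest_path(graph, "rag", "knowledge graph")
context = path_context(path, texts)
print(" -> ".join(path))
```

The collected `context` plays the same role as the retrieved documents in Vector RAG: it is the text that would be placed into the LLM prompt, except that it follows relationships between concepts rather than a single similarity ranking.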

Maintenance & Community

The project is actively developed by the neuml organization. Further details on community and roadmap are not explicitly provided in the README.

Licensing & Compatibility

The project's licensing is not explicitly stated in the README. Compatibility for commercial use or closed-source linking would require clarification.

Limitations & Caveats

AWQ models are restricted to x86-64 architectures. The README does not detail specific performance benchmarks or known limitations of the Graph RAG implementation.
