
rag-research-agent-template by langchain-ai

RAG research agent template

Top 90.9% on SourcePulse

This project provides a starter template for building a Retrieval-Augmented Generation (RAG) research agent with LangGraph. It is aimed at developers creating LLM-powered applications that research a topic by retrieving relevant passages from indexed documents and synthesizing them into an answer. The template has a modular architecture, so data sources, language models, and retrieval strategies can each be customized.

How It Works

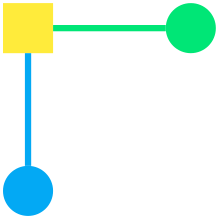

The project uses LangGraph to orchestrate three main components: an index graph for document ingestion and indexing, a retrieval graph that manages the conversation and routes queries, and a researcher subgraph that executes research plans. When the retrieval graph detects a query about LangChain, it generates a research plan and passes it to the researcher subgraph. The researcher runs document retrieval for each step of the plan in parallel and returns the relevant documents to the retrieval graph, which uses them to formulate a comprehensive response. Splitting a question into steps this way lets the agent gather targeted information for complex research tasks.
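The route, plan, and parallel-retrieval flow described above can be sketched in plain Python. This is not the template's code and does not use LangGraph: `route`, `make_plan`, `retrieve`, the `CORPUS` dictionary, and the keyword matching are hypothetical stand-ins (the real graphs call an LLM and a vector store), and `asyncio.gather` plays the role of the researcher's parallel fan-out over plan steps.

```python
import asyncio
import re
from dataclasses import dataclass, field

# Hypothetical toy stand-in for the vector store that the index graph would populate.
CORPUS = {
    "doc1": "LangGraph builds stateful multi-step agents as graphs.",
    "doc2": "LangChain retrievers return documents relevant to a query.",
    "doc3": "Vector stores such as Pinecone index document embeddings.",
}


def tokens(text: str) -> set[str]:
    """Lowercase word set used by the toy lexical retriever."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class ResearchState:
    question: str
    steps: list[str] = field(default_factory=list)
    documents: list[str] = field(default_factory=list)
    answer: str = ""


def route(question: str) -> str:
    # Hypothetical keyword router; a real agent would ask an LLM to classify.
    return "langchain" if "langchain" in question.lower() else "general"


def make_plan(question: str) -> list[str]:
    # Hypothetical planner: one research step per "and"-separated clause.
    return [part.strip() for part in question.split(" and ") if part.strip()]


async def retrieve(step: str, k: int = 2) -> list[str]:
    """Return up to k document IDs that share at least one word with the step."""
    await asyncio.sleep(0)  # placeholder for a network call to the vector store
    terms = tokens(step)
    ranked = sorted(
        CORPUS,
        key=lambda doc_id: len(terms & tokens(CORPUS[doc_id])),
        reverse=True,
    )
    return [doc_id for doc_id in ranked[:k] if terms & tokens(CORPUS[doc_id])]


async def research(state: ResearchState) -> ResearchState:
    # Fan out one retrieval per plan step and await them concurrently.
    results = await asyncio.gather(*(retrieve(step) for step in state.steps))
    for docs in results:  # merge in plan order, dropping duplicates
        for doc_id in docs:
            if doc_id not in state.documents:
                state.documents.append(doc_id)
    return state


async def run(question: str) -> ResearchState:
    state = ResearchState(question=question)
    if route(question) != "langchain":
        state.answer = "General question: answered without retrieval."
        return state
    state.steps = make_plan(question)
    state = await research(state)
    state.answer = "Answer grounded in: " + ", ".join(state.documents)
    return state


if __name__ == "__main__":
    result = asyncio.run(run("LangChain retrievers and LangGraph agents"))
    print(result.steps, result.documents)
```

For the sample question, the plan has two steps, each step retrieves its own document concurrently, and the merged list is `doc2` followed by `doc1`; a question without "LangChain" skips research entirely.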

Quick Start & Requirements

- Setup:

  - Clone the repository.

  - Create a `.env` file from `.env.example` and configure API keys and connection details for your chosen retriever (Elasticsearch, MongoDB Atlas, or Pinecone) and language models (Anthropic or OpenAI).

- Indexing: Use LangGraph Studio to invoke the "indexer" graph, optionally providing documents or using the default sample documents.

- Research: Switch to the "retrieval_graph" in LangGraph Studio and query the agent about LangChain topics.

- Prerequisites: LangGraph Studio, Python, Docker (for a local Elasticsearch instance), and API keys for your chosen LLM and vector database providers.

- Documentation: LangGraph
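The setup step comes down to supplying credentials for each chosen provider. A minimal sketch of checking a `.env` file before launching, assuming simple `KEY=VALUE` lines: `OPENAI_API_KEY`, `ANTHROPIC_API_KEY`, and `PINECONE_API_KEY` are the names those providers' SDKs conventionally read, while `COHERE_API_KEY`, `ELASTICSEARCH_URL`, and `MONGODB_URI` are assumptions. The names the template's `.env.example` actually lists take precedence over this table.

```python
import os
from pathlib import Path

# Assumed variable names per provider; confirm against the template's .env.example.
REQUIRED_KEYS = {
    "anthropic": ["ANTHROPIC_API_KEY"],
    "openai": ["OPENAI_API_KEY"],
    "cohere": ["COHERE_API_KEY"],  # assumption
    "pinecone": ["PINECONE_API_KEY"],
    "elasticsearch": ["ELASTICSEARCH_URL"],  # assumption
    "mongodb": ["MONGODB_URI"],  # assumption
}


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("\"'")  # drop surrounding quotes
    return values


def missing_keys(env: dict[str, str], providers: list[str]) -> list[str]:
    """List required variables that are absent or empty for the chosen providers."""
    return [
        key
        for provider in providers
        for key in REQUIRED_KEYS[provider]
        if not env.get(key)
    ]


if __name__ == "__main__":
    env_file = Path(".env")
    file_values = parse_env(env_file.read_text()) if env_file.exists() else {}
    # Variables already set in the shell take precedence over the file.
    env = {**file_values, **os.environ}
    gaps = missing_keys(env, ["anthropic", "openai", "elasticsearch"])
    print("missing:", gaps or "none")
```

A library such as python-dotenv handles quoting and escaping more robustly; the hand-rolled parser only keeps the sketch dependency-free.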

Highlighted Details

- Supports multiple vector databases: Elasticsearch, MongoDB Atlas, and Pinecone.

- Allows customization of LLMs for response and query generation, with support for Anthropic and OpenAI models.

- Offers flexibility in choosing embedding models, with OpenAI and Cohere as options.

- Enables tuning of retrieval parameters and customization of prompts to tailor the agent's behavior.
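The options in this list can be pictured as one settings object. The sketch below is a hypothetical `AgentConfig`, not the template's configuration class: the field names, the "provider/model" string convention, the placeholder model names, and the prompt text are assumptions. The `k` entry in `search_kwargs` mirrors the option LangChain retrievers commonly accept for the number of documents returned per search.

```python
from dataclasses import dataclass, field

# Options named in the feature list above; the identifier spellings are assumptions.
RETRIEVERS = {"elasticsearch", "mongodb", "pinecone"}
CHAT_PROVIDERS = {"anthropic", "openai"}
EMBEDDING_PROVIDERS = {"openai", "cohere"}


def split_model(spec: str, allowed: set[str]) -> tuple[str, str]:
    """Split a "provider/model" string and reject unsupported providers."""
    provider, sep, model = spec.partition("/")
    if not sep or not model:
        raise ValueError(f"expected 'provider/model', got {spec!r}")
    if provider not in allowed:
        raise ValueError(f"unsupported provider {provider!r} in {spec!r}")
    return provider, model


@dataclass
class AgentConfig:
    # Model names are placeholders, not the template's defaults.
    retriever_provider: str = "elasticsearch"
    embedding_model: str = "openai/your-embedding-model"
    query_model: str = "anthropic/your-query-model"
    response_model: str = "anthropic/your-response-model"
    # "k" = number of documents to return per search.
    search_kwargs: dict = field(default_factory=lambda: {"k": 4})
    response_prompt: str = "Answer using only these documents:\n{docs}"

    def __post_init__(self) -> None:
        # Validate every choice up front so misconfiguration fails fast.
        if self.retriever_provider not in RETRIEVERS:
            raise ValueError(f"unsupported retriever {self.retriever_provider!r}")
        split_model(self.embedding_model, EMBEDDING_PROVIDERS)
        split_model(self.query_model, CHAT_PROVIDERS)
        split_model(self.response_model, CHAT_PROVIDERS)
        k = self.search_kwargs.get("k", 4)
        if not isinstance(k, int) or k < 1:
            raise ValueError(f"search_kwargs['k'] must be a positive int, got {k!r}")

    def render_prompt(self, docs: list[str]) -> str:
        """Fill the customizable prompt template with retrieved documents."""
        return self.response_prompt.format(docs="\n".join(docs))
```

For example, `AgentConfig(retriever_provider="pinecone", response_model="openai/your-chat-model", search_kwargs={"k": 8})` switches the vector store, swaps the response model's provider, and widens retrieval, while an embedding spec such as `"anthropic/..."` is rejected because only OpenAI and Cohere embeddings are listed.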

Maintenance & Community

The project is associated with the LangGraph ecosystem. Further community engagement and support can likely be found through LangGraph's official channels.

Licensing & Compatibility

The repository's license is not explicitly stated in the provided README. Users should verify licensing terms for any commercial use or integration with closed-source projects.

Limitations & Caveats

The README mentions that documentation for LangGraph is "under construction." Some components or features might be subject to change as the underlying libraries evolve. The agent's effectiveness is dependent on the quality of the indexed documents and the configuration of the LLMs and retrieval parameters.

1 year ago

Inactive

Similar projects (by owner): neuml, leettools-dev, SciPhi-AI, redis, felladrin, 666ghj, paulpierre, GiovanniPasq, rashadphz, microsoft, tobi, Cinnamon