whisper_dictation by themanyone

Voice keyboard for local AI chat, image gen, webcam, & voice control

Top 91.2% on SourcePulse

This project provides a private, voice-controlled interface for interacting with a computer, integrating speech-to-text, AI chat, image generation, and system control. It targets users seeking a hands-free, AI-powered computing experience, akin to a "ship's computer," enabling tasks like dictation, web searches, and application launching via voice commands.

How It Works

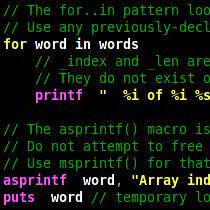

The system uses whisper.cpp for efficient, local speech-to-text and translation, minimizing external dependencies. Voice commands are parsed to trigger actions, with pyautogui handling system control and application launching. For AI chat, it can optionally connect to local LLMs (via llama.cpp) or cloud services (OpenAI, Gemini). Text-to-speech is provided by mimic3 or piper, and image generation by a local Stable Diffusion install.
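
As a rough illustration of the "parse, then trigger" step described above, the following sketch maps a transcribed phrase to an action name and an argument. It is not the project's actual code: the command table, `parse_command`, `handle`, and the action names are all hypothetical, and the handlers are injected so the logic can run without a display. In a real setup a handler might wrap a pyautogui call such as `pyautogui.write(text)`.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical command table (spoken prefix -> action name).
# These phrases are illustrative and are not taken from whisper_dictation.
COMMANDS: Dict[str, str] = {
    "open": "launch_app",
    "search for": "web_search",
    "type": "type_text",
}


def parse_command(transcript: str) -> Optional[Tuple[str, str]]:
    """Return (action, argument) if the transcript starts with a known prefix."""
    text = transcript.strip().lower().rstrip(".!?")
    # Try longer prefixes first so multi-word commands are not shadowed.
    for prefix in sorted(COMMANDS, key=len, reverse=True):
        if text == prefix or text.startswith(prefix + " "):
            return COMMANDS[prefix], text[len(prefix):].strip()
    return None


def handle(
    transcript: str,
    actions: Dict[str, Callable[[str], object]],
    fallback: Callable[[str], object],
) -> object:
    """Dispatch a transcript to an action handler, or treat it as dictation."""
    parsed = parse_command(transcript)
    if parsed is None:
        # No command matched: pass the original text through unchanged.
        return fallback(transcript)
    action, argument = parsed
    return actions[action](argument)
```

Keeping the parser separate from the handlers means the matching rules can be tested on plain strings, while the side effects (typing, launching, searching) stay in small replaceable callables.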

Quick Start & Requirements

- Install GStreamer and `ladspa-delay-so-delay-5s` (via `gstreamer1-plugins-bad-free-extras`).

- Install Python dependencies: `pip install -r whisper_dictation/requirements.txt`.

- Build `whisper.cpp` with CUDA support: `GGML_CUDA=1 make -j`.

- Run the `whisper.cpp` server: `./whisper_cpp_server -l en -m models/ggml-tiny.en.bin --port 7777`.

- Requires >= 4 GiB VRAM for full functionality, especially with LLMs and image generation.
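
Before starting the dictation client, it can help to confirm that the speech server from the step above is accepting connections. The sketch below only checks that a TCP port is open. It assumes the server runs on the local machine on port 7777, as in the command above; the helper name `server_is_up` is hypothetical, and nothing here relies on the server's HTTP API.

```python
import socket


def server_is_up(host: str = "127.0.0.1", port: int = 7777, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError on refusal, timeout, or an unreachable host.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `server_is_up()` with the defaults checks the port used in the quick-start command. A `True` result only shows that some process is listening there, not that the model has finished loading.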

Highlighted Details

- Reduced dependencies by eliminating `torch`, `pycuda`, `cudnn`, and `ffmpeg`.

- Stable Diffusion can run with as little as 2 GiB VRAM using `--medvram` or `--lowvram` flags.

- Supports local LLMs via `llama.cpp` and optional cloud APIs (OpenAI, Gemini).

- Enables voice-controlled webcam, audio recording, and application launching.

Maintenance & Community

- Developed by Henry Kroll III (themanyone).

- Links to GitHub, YouTube, Mastodon, LinkedIn, and a "Buy Me a Coffee" page are provided.

- Mentions that `mimic3` may be abandoned in favor of `piper`.

Licensing & Compatibility

- Licensed under MIT.

- Permissive license allows for individual modification and use, suitable for commercial applications.

Limitations & Caveats

- `mimic3` is noted as potentially abandoned, with `piper` suggested as a replacement.

- High VRAM usage can occur with large models and context windows, potentially leading to crashes.

- Performance may vary based on hardware, especially for LLM inference and image generation.
