Awesome-state-space-models by radarFudan

Collection of papers on state-space models

Top 53.4% on SourcePulse

This repository is a curated collection of research papers and code on State-Space Models (SSMs), intended as a reference for researchers and practitioners exploring alternatives to Transformers for sequence modeling. It covers advances in SSM architectures, theoretical analyses, and applications in domains such as language, vision, and reinforcement learning.

How It Works

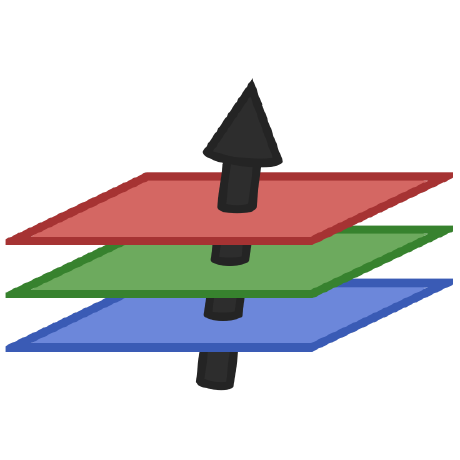

The collection shows how SSMs, particularly variants such as Mamba, aim to avoid the quadratic cost in sequence length of Transformer self-attention by using efficient recurrence relations and selective state updates. Key innovations include input-dependent gating mechanisms, hardware-aware parallelization, and novel parameterization techniques that improve stability and performance on long sequences. The intended trade-off is compute that grows linearly with sequence length while modeling power stays competitive with Transformers.
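
To make the idea of a recurrence with selective state updates concrete, here is a minimal pure-Python sketch of a single-channel, diagonal selective SSM. It is an illustration under stated assumptions, not code from any listed paper: the function names and the parameters `a_diag`, `w_b`, `w_c`, and `w_delta` are hypothetical, and real implementations use learned projections and hardware-aware parallel scans rather than a Python loop.

```python
import math


def softplus(z):
    # Numerically stable log(1 + exp(z)); keeps the step size positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, a_diag, w_b, w_c, w_delta=1.0):
    """Run a toy selective SSM over a list of floats (one channel).

    a_diag: negative decay rates, one per state dimension (hypothetical).
    w_b, w_c: weights that make the input and output maps depend on x_t.
    w_delta: weight for the input-dependent step size (the "gate").
    """
    n = len(a_diag)
    h = [0.0] * n  # hidden state, fixed size regardless of sequence length
    ys = []
    for x in xs:  # one state update per token: linear in sequence length
        delta = softplus(w_delta * x)  # input-dependent step size
        y = 0.0
        for i in range(n):
            a_bar = math.exp(delta * a_diag[i])  # discretized decay in (0, 1)
            b_t = w_b[i] * x  # input-dependent input map
            c_t = w_c[i] * x  # input-dependent output map
            h[i] = a_bar * h[i] + delta * b_t * x
            y += c_t * h[i]
        ys.append(y)
    return ys


if __name__ == "__main__":
    out = selective_scan(
        [1.0, 0.0, -0.5, 2.0],
        a_diag=[-1.0, -0.5],
        w_b=[1.0, 0.5],
        w_c=[1.0, 1.0],
    )
    print(out)
```

Because the step size, input map, and output map are all recomputed from the current token, the model can decide per token how much of the past state to keep. The state has a fixed size, so memory does not grow with the sequence.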

Quick Start & Requirements

This is a collection of papers and links, not a runnable library. To explore specific implementations, users should refer to the linked GitHub repositories for each paper. Requirements will vary per project but generally include Python and deep learning frameworks like PyTorch or JAX.

Highlighted Details

- Mamba Architecture: Features papers detailing Mamba, a selective SSM that achieves linear time complexity with input-dependent gating.

- Broad Applications: Covers SSM applications in language modeling, computer vision (VMamba, U-Mamba), reinforcement learning, and time series forecasting.

- Theoretical Foundations: Includes research on generalization error analysis, stability, parameterization, and the theoretical expressivity of SSMs.

- Transformer Comparisons: Presents studies that directly compare SSM performance against Transformers, often highlighting competitive or superior results on long-sequence tasks.
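
The linear-versus-quadratic claim in the list above can be illustrated with rough operation counts. The sketch below is a back-of-the-envelope comparison only: the function names are hypothetical, the widths `d_model=64` and `d_state=16` are arbitrary assumptions, and constant factors, projections, and memory traffic are ignored.

```python
def attention_score_ops(seq_len, d_model):
    # Self-attention scores every (query, key) pair with a d_model-wide
    # dot product: seq_len * seq_len * d_model multiply-adds.
    return seq_len * seq_len * d_model


def ssm_scan_ops(seq_len, d_model, d_state):
    # A diagonal SSM scan updates d_state values per channel, once per token.
    return seq_len * d_model * d_state


if __name__ == "__main__":
    # Assumed, illustrative sizes; not taken from any listed paper.
    d_model, d_state = 64, 16
    for seq_len in (1_000, 10_000, 100_000):
        attn = attention_score_ops(seq_len, d_model)
        scan = ssm_scan_ops(seq_len, d_model, d_state)
        print(seq_len, attn, scan, attn / scan)
```

Under these assumptions, doubling the sequence length quadruples the attention count but only doubles the scan count, which is why the gap widens on long sequences.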

Maintenance & Community

The repository is maintained by radarFudan and appears to be actively updated with recent publications in the field. Links to GitHub repositories for many papers are provided, facilitating community engagement with specific implementations.

Licensing & Compatibility

The repository itself is a collection of links and does not have a specific license. Individual linked repositories will have their own licenses, which should be checked for compatibility with commercial or closed-source use.

Limitations & Caveats

This resource is a bibliography and code index, not a unified framework. Users must navigate individual paper repositories for setup, dependencies, and specific usage instructions. The rapid evolution of the field means some linked papers or code might become outdated.
