StarGANv2-VC by yl4579

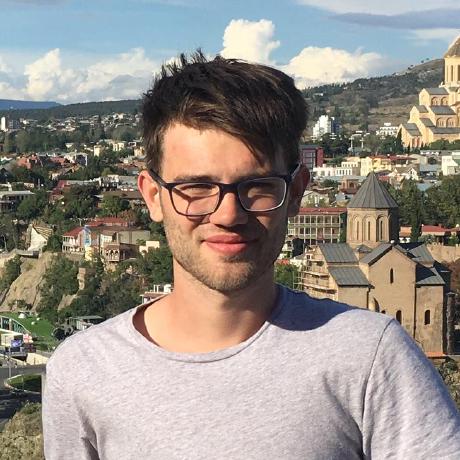

yl4579

Voice conversion research paper using StarGAN v2

Top 60.7% on SourcePulse

This repository provides StarGANv2-VC, an unsupervised, non-parallel framework for diverse voice conversion. It enables many-to-many voice conversion, cross-lingual conversion, and stylistic speech conversion (e.g., emotional, falsetto) without requiring paired data or text labels. The target audience includes researchers and developers working on speech synthesis and voice manipulation.

How It Works

StarGANv2-VC is built on a generative adversarial network (GAN). It combines an adversarial source classifier loss with a perceptual loss to produce natural-sounding converted speech. A key component is the style encoder, which lets the model convert plain speech into different styles, such as emotional or falsetto speech. The resulting quality is comparable to state-of-the-art text-to-speech (TTS) based voice conversion methods, and when paired with a fast vocoder the model can convert voices in real time.
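To make the adversarial source classifier loss more concrete, here is a minimal pure-Python sketch of its two cross-entropy terms as we understand them from the paper: the classifier is trained to recover the source speaker from converted speech, while the generator is trained so that the classifier predicts the target speaker instead. The function names and the plain-list logits are hypothetical; a real implementation would operate on batched PyTorch tensors, and the repository's actual code may differ.

```python
import math
from typing import Sequence, Tuple


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for a single example, using log-sum-exp for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def source_classifier_losses(
    logits: Sequence[float], y_src: int, y_trg: int
) -> Tuple[float, float]:
    """Return (classifier_loss, generator_loss) for one converted utterance.

    `logits` are hypothetical source-classifier scores over speaker domains,
    computed on the converted sample G(X, s).
    - The classifier minimizes cross-entropy against the SOURCE speaker,
      i.e. it learns to detect traces of the original voice.
    - The generator minimizes cross-entropy against the TARGET speaker,
      i.e. it learns to remove those traces.
    """
    classifier_loss = cross_entropy(logits, y_src)
    generator_loss = cross_entropy(logits, y_trg)
    return classifier_loss, generator_loss


# Example with 3 speakers: the classifier still "hears" speaker 0 in the output.
cls_loss, gen_loss = source_classifier_losses([2.0, 0.0, 0.0], y_src=0, y_trg=1)
print(f"classifier loss: {cls_loss:.3f}, generator loss: {gen_loss:.3f}")
```

When the logits still favor the source speaker, the classifier loss is small and the generator loss is large, so minimizing the generator term pushes the converted speech away from the source voice. In the full model this term is combined with the other training objectives, including the perceptual loss.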

Quick Start & Requirements

- Install:

pip install SoundFile torchaudio munch parallel_wavegan pydub pyyaml click librosa

- Prerequisites: Python >= 3.7, VCTK dataset (downsampled to 24 kHz). Pretrained ASR and F0 models are provided but may require retraining for non-English or non-speech data.

- Setup: Clone the repository, prepare the VCTK dataset, and configure config.yml.

- Links: Paper: https://arxiv.org/abs/2107.10394, Audio samples: https://starganv2-vc.github.io/
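Before training, it can help to confirm that the prerequisites are in place. The following stdlib-only Python sketch checks the interpreter version, whether the listed packages are importable, and whether WAV files in a folder are already at 24 kHz. The script and its function names are hypothetical and not part of the repository. It assumes the downsampled files are PCM WAV (which the stdlib `wave` module can read), and the pip-name-to-import-name mapping is an assumption worth double-checking (for example, `pyyaml` is imported as `yaml`).

```python
import importlib.util
import sys
import wave
from pathlib import Path
from typing import Dict, Iterable, List, Tuple

# pip package name -> import name (assumed mapping; verify against each package)
REQUIRED_MODULES = {
    "SoundFile": "soundfile",
    "torchaudio": "torchaudio",
    "munch": "munch",
    "parallel_wavegan": "parallel_wavegan",
    "pydub": "pydub",
    "pyyaml": "yaml",
    "click": "click",
    "librosa": "librosa",
}
TARGET_SAMPLE_RATE = 24_000  # VCTK is expected to be downsampled to 24 kHz


def python_ok(min_version: Tuple[int, int] = (3, 7)) -> bool:
    """True if the running interpreter meets the minimum version."""
    return sys.version_info[:2] >= min_version


def missing_modules(import_names: Iterable[str]) -> List[str]:
    """Return the import names that cannot be found in the current environment."""
    return [name for name in import_names if importlib.util.find_spec(name) is None]


def wrong_sample_rate(folder: Path, expected: int = TARGET_SAMPLE_RATE) -> Dict[str, int]:
    """Map each PCM WAV file under `folder` whose rate differs from `expected` to its rate."""
    bad = {}
    for path in sorted(folder.rglob("*.wav")):
        with wave.open(str(path), "rb") as wav:
            rate = wav.getframerate()
        if rate != expected:
            bad[str(path)] = rate
    return bad


if __name__ == "__main__":
    print("Python >= 3.7:", python_ok())
    print("Missing modules:", missing_modules(REQUIRED_MODULES.values()) or "none")
    # Optional first argument: a folder of downsampled VCTK WAV files to check.
    if len(sys.argv) > 1 and Path(sys.argv[1]).is_dir():
        mismatched = wrong_sample_rate(Path(sys.argv[1]))
        print(f"{len(mismatched)} WAV file(s) not at {TARGET_SAMPLE_RATE} Hz")
```

Run it with a data folder as the first argument (for example, `python check_env.py path/to/wavs`, where the script name is hypothetical) to also verify the sample rate of every WAV file.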

Highlighted Details

- Received the INTERSPEECH 2021 Best Paper Award.

- Achieves natural-sounding voices comparable to TTS-based methods.

- Supports real-time voice conversion with vocoders like Parallel WaveGAN.

- Generalizes to any-to-many, cross-lingual, and singing conversion tasks.
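Whether conversion runs in real time is commonly judged by the real-time factor (RTF): processing time divided by the duration of the audio produced, where an RTF below 1.0 means faster than real time. The sketch below shows that arithmetic; the function names are illustrative, and `convert` stands for a hypothetical zero-argument callable wrapping your own converter plus vocoder (for example, Parallel WaveGAN). No timings here come from the authors.

```python
import time
from typing import Callable, Sequence


def audio_duration_seconds(num_samples: int, sample_rate: int = 24_000) -> float:
    """Duration of a waveform in seconds (default rate matches the 24 kHz VCTK setup)."""
    if sample_rate <= 0:
        raise ValueError("sample_rate must be positive")
    return num_samples / sample_rate


def real_time_factor(processing_seconds: float, num_samples: int, sample_rate: int = 24_000) -> float:
    """RTF = processing time / audio duration; below 1.0 means faster than real time."""
    duration = audio_duration_seconds(num_samples, sample_rate)
    if duration == 0:
        raise ValueError("cannot compute an RTF for empty audio")
    return processing_seconds / duration


def is_real_time(processing_seconds: float, num_samples: int, sample_rate: int = 24_000) -> bool:
    """True if processing finished faster than the audio would take to play."""
    return real_time_factor(processing_seconds, num_samples, sample_rate) < 1.0


def measure_rtf(convert: Callable[[], Sequence[float]], sample_rate: int = 24_000) -> float:
    """Time a hypothetical `convert` callable that returns output samples, and report its RTF."""
    start = time.perf_counter()
    waveform = convert()
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, len(waveform), sample_rate)


# 3 s of 24 kHz audio (72,000 samples) produced in 0.6 s gives an RTF of about 0.2.
print(real_time_factor(0.6, 72_000))
```

Measured RTF depends on hardware, utterance length, and whether one-time setup such as model loading is included in the timing, so it is worth excluding setup and averaging over several utterances.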

Maintenance & Community

The project is associated with Yinghao Aaron Li and Nima Mesgarani. Further details on community or roadmap are not explicitly provided in the README.

Licensing & Compatibility

The repository's licensing is not explicitly stated in the README. Compatibility for commercial use or closed-source linking would require clarification.

Limitations & Caveats

While the provided ASR model also works for other languages, training custom ASR and F0 models is recommended for the best results on non-English or non-speech data. The README also notes that batch_size = 5 requires approximately 10 GB of GPU memory, so training calls for a high-memory GPU.
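The single data point above (batch_size = 5 at roughly 10 GB) implies about 2 GB per sample. Under the simplifying assumption that memory grows linearly with batch size and fixed overhead is negligible, which is rarely exactly true for a real model, a first guess for another GPU can be computed as below. The helper and its `headroom` parameter are hypothetical; confirm any choice by actually running a training step.

```python
import math

REFERENCE_BATCH_SIZE = 5      # from the README
REFERENCE_MEMORY_GB = 10.0    # approximate GPU memory at that batch size


def estimate_max_batch_size(gpu_memory_gb: float, headroom: float = 0.0) -> int:
    """Largest batch size that fits, assuming memory grows linearly with batch size.

    `headroom` is a hypothetical fraction of GPU memory to keep free (0.1 = 10%).
    """
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    per_sample_gb = REFERENCE_MEMORY_GB / REFERENCE_BATCH_SIZE  # about 2 GB per sample
    usable_gb = gpu_memory_gb * (1.0 - headroom)
    return max(0, math.floor(usable_gb / per_sample_gb))


for memory in (8, 12, 16, 24):
    print(f"{memory} GB GPU -> batch_size ~ {estimate_max_batch_size(memory)}")
```

Because the real model also holds weights, optimizer state, and activations whose size depends on utterance length, leaving some headroom (for example, `headroom=0.1`) is a safer starting point than the raw estimate.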

Last activity: 1 year ago (Inactive)

Similar Projects

- SpeechifyInc
- Rongjiehuang
- deterministic-algorithms-lab
- PlayVoice
- Tomiinek
- boson-ai
- Plachtaa
- myshell-ai
- svc-develop-team
- CorentinJ