
Comprehensive-Transformer-TTS by keonlee9420


PyTorch toolkit for non-autoregressive transformer text-to-speech (TTS)


This repository provides a comprehensive PyTorch implementation of non-autoregressive Transformer-based Text-to-Speech (TTS) systems. It supports various state-of-the-art Transformer architectures and offers both supervised and unsupervised duration modeling, aiming to advance TTS research and quality. The project is suitable for researchers and developers looking to experiment with and build advanced TTS models.

How It Works

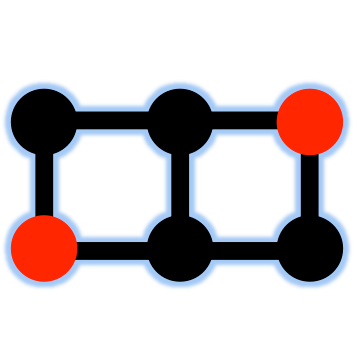

The core of the system is a non-autoregressive Transformer encoder-decoder architecture. It allows for flexible integration of different Transformer variants (e.g., Fastformer, Conformer, Reformer) as building blocks. Duration modeling is a key component, with options for supervised methods (like FastSpeech 2) and unsupervised methods that eliminate the need for external alignment tools. Prosody modeling is also supported, enabling control over pitch, volume, and speaking rate.
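Because the model is non-autoregressive, it needs to know how many output frames each input phoneme covers before decoding, which is what duration modeling supplies. A minimal sketch of that expansion step (often called a length regulator in FastSpeech-style models) is shown below. The function name `length_regulate` and the use of plain Python lists are illustrative assumptions and are not taken from the repository, which operates on PyTorch tensors.

```python
from typing import List, Sequence


def length_regulate(
    hidden: Sequence[Sequence[float]], durations: Sequence[int]
) -> List[List[float]]:
    """Repeat each phoneme-level vector by its duration in frames.

    Hypothetical helper for illustration only; not the repository's API.
    """
    if len(hidden) != len(durations):
        raise ValueError("need exactly one duration per phoneme vector")
    frames: List[List[float]] = []
    for vec, dur in zip(hidden, durations):
        if dur < 0:
            raise ValueError("durations must be non-negative")
        # Copy the vector so every output frame is an independent list.
        frames.extend(list(vec) for _ in range(dur))
    return frames


# Three phoneme vectors; the second one is assigned zero frames.
phoneme_states = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
frame_states = length_regulate(phoneme_states, [2, 0, 3])
print(len(frame_states))  # 5
```

The expanded sequence has one vector per output frame, so a decoder can process all frames in parallel rather than one step at a time.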

Quick Start & Requirements

- Install dependencies: `pip3 install -r requirements.txt`

- Dockerfile is provided.

- Inference requires downloading pre-trained models and placing them in `output/ckpt/DATASET/`.

- Run inference: `python3 synthesize.py --text "YOUR_DESIRED_TEXT" --restore_step RESTORE_STEP --mode single --dataset DATASET`

- Training: `python3 train.py --dataset DATASET`

- Supports LJSpeech and VCTK datasets; custom datasets can be adapted.

- Forced alignment can be done using Montreal Forced Aligner (MFA) or by using provided pre-extracted alignments.

- Official documentation for dataset adaptation is available.
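For scripted runs, the inference and training commands above can be assembled as argument lists and passed to `subprocess.run`. The sketch below only builds the lists, using the flags shown in this section; the helper names, the step value `1000`, and the dataset string `"LJSpeech"` are placeholder assumptions, so substitute the checkpoint step and dataset name you actually have.

```python
import shlex
from typing import List


def synthesize_command(text: str, restore_step: int, dataset: str) -> List[str]:
    """Build the single-sentence inference command (hypothetical helper)."""
    return [
        "python3", "synthesize.py",
        "--text", text,
        "--restore_step", str(restore_step),
        "--mode", "single",
        "--dataset", dataset,
    ]


def train_command(dataset: str) -> List[str]:
    """Build the training command (hypothetical helper)."""
    return ["python3", "train.py", "--dataset", dataset]


# Placeholder values: 1000 and "LJSpeech" are illustrative, not verified defaults.
cmd = synthesize_command("Hello world", 1000, "LJSpeech")
# shlex.join (Python 3.8+) quotes arguments so the line can be pasted into a shell.
print(shlex.join(cmd))
```

Passing a list rather than a single string avoids shell-quoting problems when the text contains spaces or quotation marks.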

Highlighted Details

- Supports multiple Transformer architectures: Fastformer, Long-Short Transformer, Conformer, Reformer, and standard Transformer.

- Offers both supervised and unsupervised duration modeling, with unsupervised methods freeing users from external aligners.

- Includes prosody modeling for controlling pitch, volume, and speaking rate.

- Benchmarks show competitive memory usage and training times across different Transformer blocks on a TITAN RTX.

- Supports single-speaker and multi-speaker TTS, with options for speaker embedding using pre-trained DeepSpeaker models.
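The prosody controls listed above can be pictured as scale factors applied to predicted durations, pitch, and energy before decoding. The sketch below assumes a convention common in FastSpeech 2-style code, where durations are predicted as log(d + 1) and controls are multiplicative; whether this repository uses exactly that parameterization is an assumption, and the function and parameter names are hypothetical.

```python
import math
from typing import List, Sequence


def durations_from_log(
    log_durations: Sequence[float], duration_control: float = 1.0
) -> List[int]:
    """Turn log(d + 1) predictions into frame counts, scaled by a rate factor.

    A duration_control above 1.0 lengthens phonemes (slower speech).
    Assumed convention for illustration; not confirmed against the repository.
    """
    frames = []
    for log_d in log_durations:
        raw = (math.exp(log_d) - 1.0) * duration_control
        # Round to whole frames and never go below zero.
        frames.append(max(0, round(raw)))
    return frames


def scale_contour(values: Sequence[float], control: float = 1.0) -> List[float]:
    """Scale a pitch or energy contour by a multiplicative control factor."""
    return [v * control for v in values]


# Predictions corresponding to 2 and 4 frames under the log(d + 1) assumption.
log_preds = [math.log(3.0), math.log(5.0)]
normal = durations_from_log(log_preds)
slow = durations_from_log(log_preds, duration_control=2.0)
raised_pitch = scale_contour([100.0, 200.0], control=1.5)
```

Here `slow` holds twice as many frames per phoneme as `normal`, which corresponds to a slower speaking rate, while `raised_pitch` shifts the whole contour upward by the same ratio.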

Maintenance & Community

The project has seen updates, including codebase fixes, pre-trained model updates, and the addition of new prosody modeling methods. It references and builds upon several prominent open-source TTS and Transformer projects.

Licensing & Compatibility

The repository's license is not explicitly stated in the provided README text. Compatibility for commercial use or closed-source linking would require clarification of the licensing terms.

Limitations & Caveats

The README mentions that unsupervised duration modeling at the phoneme level can take longer due to runtime computation. Some prosody conditioning methods (e.g., CWT) may offer more expressiveness but potentially less pitch stability compared to frame-level or phoneme-level conditioning.

3 years ago

Inactive
