
summarize-from-feedback by openai


Code and data for summarization research

Top 35.4% on SourcePulse

This repository provides code for training and evaluating summarization models using human feedback, targeting researchers and developers in NLP. It enables the replication of OpenAI's "Learning to Summarize from Human Feedback" paper, offering a supervised baseline, a reward model, and an RL fine-tuned policy.

How It Works

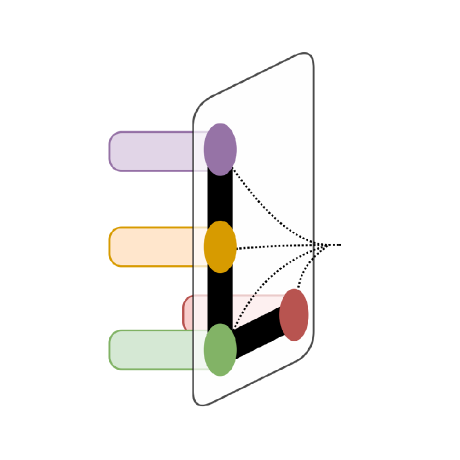

The project implements a reinforcement learning approach where a summarization model is fine-tuned using a reward model trained on human preferences. This reward model learns to score summaries based on pairwise comparisons provided by human annotators, guiding the RL policy to generate summaries that align with human judgment. This method aims to improve summary quality beyond standard supervised learning.
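To make the pairwise-comparison idea concrete, here is a minimal sketch of a standard pairwise (Bradley-Terry-style) reward-model loss. It assumes the reward model already maps each (post, summary) pair to a scalar score. The loss pushes the score of the human-preferred summary above the score of the other one. The function names `log_sigmoid` and `pairwise_reward_loss` are hypothetical and are not taken from the repository's code. Plain Python floats are used in place of a deep-learning framework so the example runs on its own.

```python
import math
from typing import Sequence


def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)), computed as -softplus(-x)
    # where softplus(y) = max(y, 0) + log1p(exp(-|y|)).
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def pairwise_reward_loss(r_chosen: Sequence[float], r_rejected: Sequence[float]) -> float:
    """Mean of -log sigmoid(r_chosen - r_rejected) over a batch of comparisons.

    Hypothetical helper: r_chosen[i] is the reward-model score of the summary the
    annotator preferred in comparison i, r_rejected[i] the score of the other one.
    """
    if len(r_chosen) != len(r_rejected) or not r_chosen:
        raise ValueError("need equal-length, non-empty score lists")
    losses = [-log_sigmoid(c - r) for c, r in zip(r_chosen, r_rejected)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Equal scores give a loss of log 2; a wider margin for the preferred summary lowers it.
    print(pairwise_reward_loss([0.0], [0.0]))
    print(pairwise_reward_loss([2.0, 1.5], [0.0, 1.0]))
```

With equal scores the loss is log 2 (about 0.693). It shrinks toward zero as the preferred summary's score pulls ahead and grows when the ordering is reversed. The trained reward model's score can then serve as the reward signal for the RL policy.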

Quick Start & Requirements

- Install using `pipenv install`.

- Requires Python 3.7 (64-bit) on Ubuntu 18.04 and an Nvidia GPU.

- Run tests with `pipenv run exps/sample.py test test-sample`.

- Official dataset download: `azcopy copy "https://openaipublic.blob.core.windows.net/summarize-from-feedback/dataset/*" . --recursive`

- Filtered TL;DR dataset: https://openaipublic.blob.core.windows.net/summarize-from-feedback/datasets/tldr_3_filtered/
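After downloading the comparison data, each human judgment has to be turned into a (preferred, rejected) pair before a reward model can train on it. The sketch below does this for JSON Lines input. It assumes each record holds a two-element `summaries` list whose entries carry a `text` field, plus an integer `choice` giving the index of the preferred summary. That schema is an assumption made for illustration and should be checked against the actual files. The helper names are hypothetical.

```python
import json
from typing import Iterable, Iterator, Tuple


def comparison_to_pair(line: str) -> Tuple[str, str]:
    """Return (preferred_text, rejected_text) for one JSON-encoded comparison.

    ASSUMPTION: the record looks like
    {"summaries": [{"text": ...}, {"text": ...}], "choice": 0 or 1}.
    """
    record = json.loads(line)
    summaries = record["summaries"]
    choice = int(record["choice"])
    if len(summaries) != 2 or choice not in (0, 1):
        raise ValueError("expected exactly two summaries and choice in {0, 1}")
    preferred = summaries[choice]["text"]
    rejected = summaries[1 - choice]["text"]
    return preferred, rejected


def iter_pairs(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (preferred, rejected) pairs from an iterable of JSON lines, skipping blanks."""
    for line in lines:
        if line.strip():
            yield comparison_to_pair(line)


if __name__ == "__main__":
    sample = '{"summaries": [{"text": "A"}, {"text": "B"}], "choice": 1}'
    print(comparison_to_pair(sample))
```

Because `iter_pairs` accepts any iterable of lines, an open file handle can be passed to it directly. The resulting pairs map onto the chosen/rejected score lists that a pairwise reward-model loss consumes.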

Highlighted Details

- Released a human feedback dataset of 64,832 summary comparisons.

- Includes code for the supervised baseline, the reward model, and the RL policy.

- Supports evaluation on TL;DR and CNN/DM datasets.

- Provides filtered versions of the TL;DR dataset.

Maintenance & Community

- Status: Archive (code is provided as-is, no updates expected).

Licensing & Compatibility

- Original TL;DR dataset is licensed under CC BY 4.0.

- No explicit license is mentioned for the code itself.

Limitations & Caveats

The project is archived and will not receive updates. It has specific platform requirements (Ubuntu 18.04, Python 3.7) and requires an Nvidia GPU, potentially limiting broader adoption.


Similar Projects (by owner)

- MLNLP-World

- SteveKGYang

- Yale-LILY

- nlpyang

- uoneway

- onejune2018

- IndicoDataSolutions

- huggingface

- graviraja

- lmmlzn

- icoxfog417

- RedditSota