SummEval by Yale-LILY

Evaluation toolkit for summarization models

This repository provides resources for the "SummEval: Re-evaluating Summarization Evaluation" paper, offering a comprehensive dataset of model-generated summaries and human annotations for evaluating summarization systems. It targets researchers and practitioners in Natural Language Processing (NLP) who need robust tools and data for assessing summarization quality.

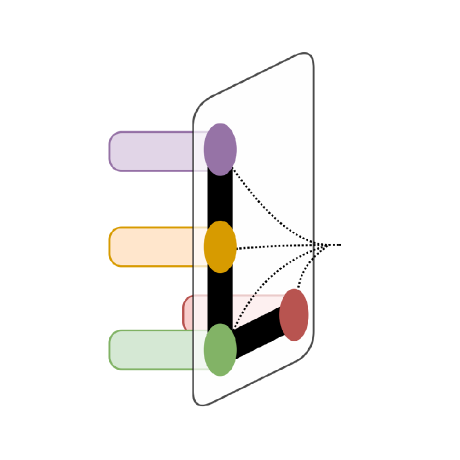

How It Works

The project provides pre-computed outputs from 23 summarization models, both extractive and abstractive, alongside human annotations across four dimensions: coherence, consistency, fluency, and relevance. It also includes an evaluation toolkit that puts established and newer metrics such as ROUGE, MoverScore, BERTScore, and BLANC behind one interface, so that summarization models can be evaluated in a standardized, reproducible way.
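As a rough illustration of how the four-dimension annotations might be consumed, the sketch below averages several annotators' ratings per dimension for one summary. The record layout (a list of per-annotator dicts keyed by dimension name) and the sample ratings are assumptions made for this example, not the dataset's documented schema.

```python
from statistics import mean

# The four quality dimensions named in the project description.
DIMENSIONS = ("coherence", "consistency", "fluency", "relevance")


def average_annotations(annotations):
    """Average each dimension across annotators for a single summary.

    `annotations` is a list of dicts, one per annotator, mapping each
    dimension name to a numeric rating (hypothetical layout).
    """
    return {dim: mean(a[dim] for a in annotations) for dim in DIMENSIONS}


# Made-up ratings from three annotators, for illustration only.
example = [
    {"coherence": 4, "consistency": 5, "fluency": 5, "relevance": 3},
    {"coherence": 3, "consistency": 5, "fluency": 4, "relevance": 4},
    {"coherence": 5, "consistency": 5, "fluency": 5, "relevance": 5},
]

scores = average_annotations(example)
print(scores)
```

Averaging is only one possible aggregation; a median or majority vote may be more appropriate when annotators disagree strongly.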

Quick Start & Requirements

- Install: `pip install summ-eval`

- Prerequisites: Python 3.x. Data pairing requires downloading CNN/DailyMail articles and running `data_processing/pair_data.py`.

- Resources: Model outputs and human annotations are available for download.

- Docs: https://github.com/Yale-LILY/SummEval
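Conceptually, the data pairing step joins each model output with its source article. The sketch below shows that idea only; it is not the logic of `data_processing/pair_data.py`. The function name `pair_records`, the `id` and `text` field names, and the sample data are all hypothetical.

```python
import json


def pair_records(jsonl_lines, articles_by_id):
    """Attach source article text to each model-output record.

    `jsonl_lines` is an iterable of JSON strings, each with an "id" field;
    `articles_by_id` maps ids to article text. Both layouts are hypothetical.
    Records whose id has no matching article are skipped.
    """
    paired = []
    for line in jsonl_lines:
        record = json.loads(line)
        article = articles_by_id.get(record["id"])
        if article is None:
            continue
        # Copy the record and add the article under a "text" key.
        paired.append({**record, "text": article})
    return paired


# Made-up inputs, for illustration only.
outputs = [
    '{"id": "doc-1", "summary": "A short summary."}',
    '{"id": "doc-2", "summary": "Another summary."}',
]
articles = {"doc-1": "Full text of the first article."}

paired = pair_records(outputs, articles)
print(paired)
```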

Highlighted Details

- Includes outputs from 23 diverse summarization models, covering both extractive and abstractive methods.

- Features human annotations from crowdsourced workers and experts on 1,600 summaries.

- Evaluation toolkit supports metrics such as ROUGE, MoverScore, BERTScore, BLANC, and SUPERT.

- Provides a command-line interface (`calc-scores`) and Python API for easy integration.
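To give a sense of what an overlap metric such as ROUGE measures, here is a simplified ROUGE-1 F1 written from scratch. It is a sketch under stated assumptions (lowercasing, whitespace tokenization, no stemming) and is not the toolkit's implementation or API; the function name `rouge1_f1` is chosen for this example.

```python
from collections import Counter


def rouge1_f1(summary, reference):
    """Simplified ROUGE-1 F1: clipped unigram overlap on lowercase,
    whitespace-split tokens. No stemming or stopword handling."""
    sum_counts = Counter(summary.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((sum_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(sum_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


score = rouge1_f1("the cat sat on the mat", "the cat lay on the mat")
print(round(score, 3))  # 0.833
```

Five of the six tokens on each side match, so precision and recall are both 5/6. Scores from the packaged metrics can differ from this sketch because of tokenization and stemming choices.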

Maintenance & Community

This project is a collaboration between Yale LILY Lab and Salesforce Research. Issues and Pull Requests are welcome via GitHub.

Licensing & Compatibility

The repository's license is not explicitly stated in the README. Model outputs are shared with author consent and require citing original papers.

Limitations & Caveats

Because no license is stated, the terms for reuse, including commercial use, are unclear. Pairing the data also requires manually downloading the external CNN/DailyMail articles.

1 year ago

Inactive
