Langchain-Chatchat by chatchat-space

RAG and agent app for local knowledge-based LLMs

Top 0.9% on SourcePulse

Langchain-Chatchat is an open-source RAG and Agent application framework that can be deployed fully offline and is aimed at Chinese-language scenarios. It lets users build question-answering systems over local knowledge bases using open-source large language models (LLMs) such as ChatGLM, Qwen, and Llama, with LangChain as the core framework.

How It Works

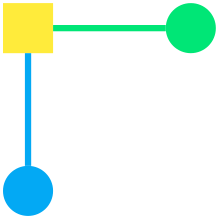

The project implements a Retrieval-Augmented Generation (RAG) pipeline: documents are loaded and split into chunks, the chunks and the user's query are converted into embedding vectors, the top-k chunks most similar to the query are retrieved, and those chunks are passed to the LLM as context together with the query to generate the answer. Version 0.3.x substantially extends the Agent capabilities and supports a range of tools, including search engines, databases, and multimodal inputs.
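The retrieval pipeline described above can be sketched end to end in plain Python. This is a toy illustration under stated assumptions, not Langchain-Chatchat's implementation: chunking is by word count, the "embedding" is a bag-of-words term-count vector compared by cosine similarity (a stand-in for a real embedding model and vector database), and the final LLM call is omitted. All function names are hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into chunks of chunk_size words; neighbours share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    # Stop once the remaining words are already covered by the previous chunk's overlap.
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks most similar to the query (a vector DB would index these)."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks and the query into one prompt for the LLM."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return ("Answer the question using only the context below.\n"
            f"Context:\n{context}\n"
            f"Question: {query}\n"
            "Answer:")


if __name__ == "__main__":
    doc = ("Documents are split into chunks before indexing. "
           "Each chunk is embedded as a vector and stored in a vector database. "
           "At query time the most similar chunks are retrieved as context.")
    question = "Where are the chunk vectors stored?"
    top = retrieve(question, chunk_text(doc), k=2)
    print(build_prompt(question, top))
    # A real pipeline would now send this prompt to the LLM.
```

Swapping `embed` for a real embedding model and the sorted list for a vector-database query keeps the same shape: chunk, embed, rank by similarity, keep the top k, and build the prompt.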

Quick Start & Requirements

- Installation: `pip install langchain-chatchat -U` (or `pip install "langchain-chatchat[xinference]" -U` for Xinference).

- Prerequisites: Python 3.8-3.11; tested on Windows, macOS, and Linux. Supports CPU, GPU, NPU, and MPS hardware. Requires a separately deployed LLM inference framework (e.g., Xinference, Ollama, LocalAI, FastChat) with models loaded.

- Setup: Configure the model inference framework, then initialize the project with `chatchat init` and `chatchat kb -r`.

- Documentation: Xinference Docs, Ollama Docs, LocalAI Docs, FastChat Docs.
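As a hedged sketch, the Quick Start sequence could be scripted in Python. The helper names `python_supported`, `setup_commands`, and `run_setup` are hypothetical; the commands are the ones listed in the Quick Start, and the script only prints them unless `dry_run=False`. It assumes the inference framework is already deployed with models loaded, and it does not perform any configuration edits that may be needed between `chatchat init` and `chatchat kb -r`. The reading of `-r` as "rebuild the knowledge base" is an assumption.

```python
from __future__ import annotations

import subprocess
import sys

# Documented prerequisite: Python 3.8 through 3.11.
MIN_PY, MAX_PY = (3, 8), (3, 11)


def python_supported(version: tuple = tuple(sys.version_info[:2])) -> bool:
    """True if (major, minor) falls inside the documented range."""
    return MIN_PY <= tuple(version[:2]) <= MAX_PY


def setup_commands(with_xinference: bool = False) -> list[list[str]]:
    """Install and initialization commands from the Quick Start, in order.

    `python -m pip` targets the running interpreter; in argument-list form the
    shell quotes around the extras spec are not needed.
    """
    package = "langchain-chatchat[xinference]" if with_xinference else "langchain-chatchat"
    return [
        [sys.executable, "-m", "pip", "install", package, "-U"],
        ["chatchat", "init"],      # initialize the project
        ["chatchat", "kb", "-r"],  # (re)build the knowledge base (assumed meaning of -r)
    ]


def run_setup(with_xinference: bool = False, dry_run: bool = True) -> None:
    """Print each command; execute them only when dry_run is False."""
    if not python_supported():
        print("Warning: this Python is outside the documented 3.8-3.11 range")
        if not dry_run:
            raise RuntimeError("unsupported Python version")
    for cmd in setup_commands(with_xinference):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a command fails,
            # which ends the sequence at that step.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Assumes the inference framework is already running with models loaded.
    run_setup(with_xinference=True, dry_run=True)
```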

Highlighted Details

- Supports a wide array of open-source LLMs, Embedding models, and vector databases for fully offline private deployment.

- Version 0.3.x introduces enhanced Agent capabilities, database interaction, multimodal chat (e.g., Qwen-VL-Chat), and tools like Wolfram Alpha.

- Integrates with multiple model serving frameworks (Xinference, Ollama, LocalAI, FastChat) and is compatible with the OpenAI API.

- Offers both FastAPI-based APIs and a Streamlit-based WebUI for interaction.
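Because the supported serving frameworks expose OpenAI-compatible endpoints, a client can query a locally deployed model with a standard chat-completions request. The sketch below uses only the Python standard library. The base URL and the model name `qwen2-instruct` are hypothetical placeholders; substitute whatever your deployment actually serves. Request building is kept separate from sending so the payload can be inspected without a running server.

```python
from __future__ import annotations

import json
import urllib.request

# Assumptions: point these at your own OpenAI-compatible server and model.
BASE_URL = "http://127.0.0.1:9997/v1"  # hypothetical address
MODEL = "qwen2-instruct"                # hypothetical model name


def build_chat_request(question: str, context: str = "",
                       base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build a POST to the standard /chat/completions route."""
    messages = []
    if context:
        # Retrieved knowledge-base text can be passed as a system message.
        messages.append({"role": "system",
                         "content": "Answer using this context:\n" + context})
    messages.append({"role": "user", "content": question})
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("What does RAG stand for?")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # print(send(req))  # uncomment once a server is listening at BASE_URL
```

No `Authorization` header is sent; add one if your server is configured to require an API key.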

Maintenance & Community

- The project has over 20K GitHub stars and active community channels, including Telegram and WeChat groups.

- Milestones include the transition from Langchain-ChatGLM to Langchain-Chatchat and significant feature additions like Agent support.

Licensing & Compatibility

- Licensed under the Apache-2.0 license.

- Compatible with commercial use and closed-source linking.

Limitations & Caveats

- The 0.3.x architecture differs significantly from earlier versions; a fresh deployment is strongly recommended over migrating an existing one.

- Users must manage the deployment and configuration of separate LLM inference frameworks.

3 months ago

Inactive

neuml

mrdjohnson

kghandour

zilliztech

your-papa

Lakr233

bramses

paulpierre

szczyglis-dev

sugarforever

AstrBotDevs

huggingface