
FunASR by modelscope

Speech recognition toolkit for bridging research and industrial applications

Top 3.3% on SourcePulse

FunASR is a comprehensive, end-to-end speech recognition toolkit designed for both academic research and industrial applications. It provides a unified platform for speech recognition (ASR), voice activity detection (VAD), punctuation restoration, and other speech processing tasks, enabling researchers and developers to build and deploy ASR systems efficiently.

How It Works

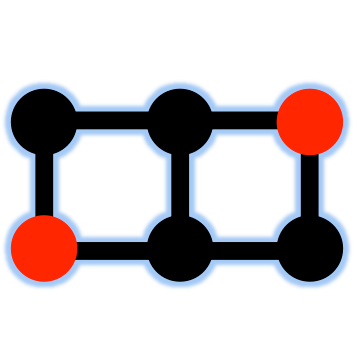

FunASR uses a modular architecture that lets users combine pre-trained models for different speech tasks. It supports both non-streaming and streaming inference, using models such as Paraformer (a non-autoregressive Transformer that predicts output tokens in parallel) for high accuracy and efficiency. The toolkit supports fine-tuning on custom datasets and offers deployment options that include real-time and file-based transcription services.
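The modular idea can be illustrated with a toy sketch: each stage is a plain callable, so a VAD, an ASR model, and a punctuation model can be swapped independently. Everything below is hypothetical and is not FunASR's API: the energy-threshold VAD, `toy_asr`, and `toy_punc` are placeholders standing in for the pre-trained neural models that FunASR actually composes.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Segment = Tuple[int, int]  # (start_sample, end_sample), end exclusive


def energy_vad(audio: Sequence[float], frame: int = 4, threshold: float = 0.1) -> List[Segment]:
    """Toy VAD: a frame counts as speech if its mean absolute amplitude exceeds threshold."""
    segments: List[Segment] = []
    start = None
    for i in range(0, len(audio), frame):
        chunk = audio[i:i + frame]
        is_speech = sum(abs(x) for x in chunk) / len(chunk) > threshold
        if is_speech and start is None:
            start = i  # speech begins
        elif not is_speech and start is not None:
            segments.append((start, i))  # speech ends
            start = None
    if start is not None:
        segments.append((start, len(audio)))  # speech runs to the end
    return segments


@dataclass
class Pipeline:
    vad: Callable[[Sequence[float]], List[Segment]]
    asr: Callable[[Sequence[float]], str]
    punc: Callable[[str], str]

    def transcribe(self, audio: Sequence[float]) -> str:
        # 1. VAD splits long audio into speech segments.
        segments = self.vad(audio)
        # 2. ASR decodes each segment independently.
        texts = [self.asr(audio[s:e]) for s, e in segments]
        # 3. Punctuation restoration runs once over the joined transcript.
        return self.punc(" ".join(t for t in texts if t))


# Hypothetical stand-ins for trained ASR and punctuation models.
def toy_asr(segment: Sequence[float]) -> str:
    return "hello" if len(segment) >= 8 else "world"


def toy_punc(text: str) -> str:
    return text[:1].upper() + text[1:] + "." if text else ""


audio = [0.0] * 4 + [0.5] * 8 + [0.0] * 4 + [0.5] * 4
pipe = Pipeline(vad=energy_vad, asr=toy_asr, punc=toy_punc)
print(pipe.transcribe(audio))  # Hello world.
```

Because the pipeline only depends on the call signatures, replacing one stage (for example, a different ASR model) does not require touching the others.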

Quick Start & Requirements

- Install: `pip3 install -U funasr`

- Prerequisites: Python >= 3.8, PyTorch >= 1.13, torchaudio. Optional: modelscope, huggingface_hub.

- Resources: GPU recommended for optimal performance.

- Docs: Tutorial, Demo Examples
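Before installing, a quick pre-flight check can confirm the Python and PyTorch prerequisites listed above. This is a hypothetical helper, not part of FunASR; it assumes the PyPI distribution names `torch` and `torchaudio` and uses only the standard library. Its version parsing is deliberately simple (leading numeric parts only), so treat it as a sanity check rather than a full PEP 440 comparison.

```python
import sys
from typing import List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Leading numeric release parts, e.g. "2.1.0+cu118" -> (2, 1, 0)."""
    parts = []
    for piece in version.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # stop at a non-numeric part such as "dev0"
        parts.append(int(digits))
    return tuple(parts)


def check_environment() -> List[str]:
    """Return human-readable problems; an empty list means the prerequisites look satisfied."""
    if sys.version_info < (3, 8):
        return [f"Python >= 3.8 required, found {sys.version.split()[0]}"]
    from importlib import metadata  # standard library since Python 3.8

    problems: List[str] = []
    # No minimum torchaudio version is listed, so only its presence is checked.
    for package, minimum in (("torch", (1, 13)), ("torchaudio", ())):
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            problems.append(f"{package} is not installed")
            continue
        if parse_version(installed) < minimum:
            wanted = ".".join(map(str, minimum))
            problems.append(f"{package} >= {wanted} required, found {installed}")
    return problems


for problem in check_environment():
    print("-", problem)
```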

Highlighted Details

- Supports a wide range of speech tasks: ASR, VAD, Punctuation Restoration, Language Models, Speaker Verification, Speaker Diarization, Speech Emotion Recognition, and Keyword Spotting.

- Offers a large model zoo of pre-trained models on ModelScope and Hugging Face, including SenseVoiceSmall, Paraformer variants, Whisper-large-v3, and Qwen-Audio.

- Provides both non-streaming and streaming inference capabilities with configurable latency parameters.

- Includes services for offline file transcription (CPU/GPU) and real-time transcription.
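The configurable streaming latency comes down to simple arithmetic. FunASR's streaming examples describe latency with a `chunk_size` list such as `[0, 10, 5]`; the sketch below assumes the convention from those examples that the second and third entries count 60 ms units (600 ms display granularity, 300 ms lookahead) on 16 kHz audio. It only computes latencies and splits audio into chunks with an `is_final` flag; it does not call FunASR, and the helper names are hypothetical. Check the current FunASR documentation for how each chunk and its state are passed to the model.

```python
from typing import Iterator, Sequence, Tuple

SAMPLE_RATE = 16_000  # assumed input sample rate (Hz)
FRAME_MS = 60         # assumed duration of one chunk_size unit (ms)


def streaming_latency_ms(chunk_size: Sequence[int]) -> Tuple[int, int]:
    """Return (display granularity, lookahead) in ms for a chunk_size like [0, 10, 5]."""
    return chunk_size[1] * FRAME_MS, chunk_size[2] * FRAME_MS


def chunk_stride(chunk_size: Sequence[int]) -> int:
    """Number of samples fed to the model per streaming step."""
    return chunk_size[1] * SAMPLE_RATE * FRAME_MS // 1000


def iter_chunks(audio: Sequence[float], chunk_size: Sequence[int]) -> Iterator[Tuple[Sequence[float], bool]]:
    """Yield (chunk, is_final) pairs; the last chunk may be shorter than the stride."""
    stride = chunk_stride(chunk_size)
    total = max(1, -(-len(audio) // stride))  # ceiling division, at least one chunk
    for i in range(total):
        yield audio[i * stride:(i + 1) * stride], i == total - 1


chunk_size = [0, 10, 5]
print(streaming_latency_ms(chunk_size))  # (600, 300)
print(chunk_stride(chunk_size))          # 9600
audio = [0.0] * 20_000                   # 1.25 s of silence at 16 kHz
print([(len(c), final) for c, final in iter_chunks(audio, chunk_size)])
# [(9600, False), (9600, False), (800, True)]
```

Raising the second entry lowers the number of model calls per second at the cost of slower partial results; raising the third gives the model more future context at the cost of added delay.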

Maintenance & Community

- Active development with frequent updates (e.g., new model support, service releases).

- Community support via GitHub Issues and a DingTalk group.

- Key contributors include researchers from Alibaba DAMO Academy.

Licensing & Compatibility

- License: MIT License for the toolkit. Pre-trained models are subject to their own Model License Agreement.

- Compatibility: The MIT-licensed toolkit permits commercial use, but each pre-trained model's license should be reviewed before commercial deployment.

Limitations & Caveats

- Some advanced features or specific model deployments might still be in progress or have limited documentation in English.

- Performance and resource requirements can vary significantly based on the chosen model and task.


Similar projects from: egorsmkv, halsay, AASHISHAG, DmitryRyumin, jonatasgrosman, RapidAI, athena-team, FunAudioLLM, k2-fsa, PaddlePaddle, espnet, openai