
HierSpeechpp by sh-lee-prml


PyTorch for zero-shot TTS/voice conversion research

Top 31.3% on SourcePulse

HierSpeech++ is a PyTorch implementation for zero-shot speech synthesis and voice conversion, aiming to bridge the gap between semantic and acoustic representations. It offers a fast and robust alternative to LLM-based and diffusion-based models, targeting researchers and developers in speech technology.

How It Works

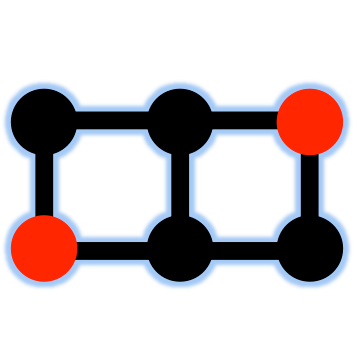

HierSpeech++ employs a hierarchical variational inference framework. For text-to-speech, a text-to-vector (TTV) model generates a self-supervised speech representation and an F0 contour from the input text and a prosody prompt. The Hierarchical Speech Synthesizer then generates a 16 kHz waveform from these vectors, conditioned on a voice prompt, and a high-efficiency speech super-resolution module upsamples the audio from 16 kHz to 48 kHz. The authors report that this hierarchical structure significantly improves robustness and expressiveness.
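The data flow described above can be sketched as a toy Python pipeline. This is not the repository's code: `text_to_vector`, `synthesize`, and `super_resolve` are hypothetical placeholders, the 320-sample hop and `frames_per_char` are assumed values, the "synthesizer" only renders a sine wave at the predicted F0, and the learned super-resolution module is replaced by naive linear interpolation. It illustrates which inputs feed which stage and how the sample count triples between 16 kHz and 48 kHz.

```python
import math
from dataclasses import dataclass
from typing import List

SYNTH_SR = 16_000   # synthesizer output rate stated in the text
OUTPUT_SR = 48_000  # super-resolution output rate stated in the text
UPSAMPLE = OUTPUT_SR // SYNTH_SR  # 3x more samples after super-resolution


@dataclass
class TTVOutput:
    representation: List[List[float]]  # per-frame speech representation (placeholder values)
    f0: List[float]                    # per-frame F0 in Hz (placeholder values)


def text_to_vector(text: str, prosody_prompt: List[float],
                   frames_per_char: int = 5, dim: int = 4) -> TTVOutput:
    """Hypothetical stand-in for the TTV model: text + prosody prompt -> representation + F0."""
    n_frames = max(1, len(text) * frames_per_char)  # assumed duration rule, not the real model
    # Toy prosody: reuse the mean F0 of the prompt for every frame.
    mean_f0 = sum(prosody_prompt) / len(prosody_prompt) if prosody_prompt else 120.0
    return TTVOutput(representation=[[0.0] * dim for _ in range(n_frames)],
                     f0=[mean_f0] * n_frames)


def synthesize(ttv: TTVOutput, voice_prompt: List[float], hop: int = 320) -> List[float]:
    """Hypothetical stand-in for the Hierarchical Speech Synthesizer (16 kHz output).

    A real synthesizer conditions on voice_prompt for speaker identity; this toy
    ignores it and renders a sine wave that follows the per-frame F0.
    """
    wav, phase = [], 0.0
    for f0 in ttv.f0:
        for _ in range(hop):  # assumed 320-sample hop = 20 ms at 16 kHz
            phase += 2 * math.pi * f0 / SYNTH_SR
            wav.append(0.1 * math.sin(phase))
    return wav


def super_resolve(wav_16k: List[float]) -> List[float]:
    """Linear interpolation standing in for the learned 16 kHz -> 48 kHz SR module."""
    out = []
    for i, x in enumerate(wav_16k):
        nxt = wav_16k[i + 1] if i + 1 < len(wav_16k) else x  # hold the last sample
        for k in range(UPSAMPLE):
            out.append(x + (nxt - x) * k / UPSAMPLE)
    return out


def tts(text: str, prosody_prompt: List[float], voice_prompt: List[float]) -> List[float]:
    """Chain the three stages: TTV -> synthesizer -> super-resolution."""
    ttv = text_to_vector(text, prosody_prompt)
    wav_16k = synthesize(ttv, voice_prompt)
    return super_resolve(wav_16k)


wav_48k = tts("hello", prosody_prompt=[110.0, 130.0], voice_prompt=[0.0] * 16_000)
print(len(wav_48k))  # 24000 = 5 chars * 5 frames * 320 samples * 3
```

Keeping each stage behind its own function mirrors the hierarchy: any one stage (for example, a proper resampler in place of `super_resolve`) can be swapped without touching the others.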

Quick Start & Requirements

- Install: `pip install -r requirements.txt` and `pip install phonemizer`. Requires `espeak-ng` (`sudo apt-get install espeak-ng`).

- Prerequisites: PyTorch >= 1.13, torchaudio >= 0.13.

- Checkpoints: Pre-trained models for the Hierarchical Speech Synthesizer and the TTV model are available for download.

- Demo: A Gradio demo is available on Hugging Face.

- Inference: Run `inference.sh` or `inference_vc.sh` with the specified checkpoints.
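A small pre-flight check for the requirements listed above might look like the sketch below. It is not part of the repository; `check_environment`, `parse_version`, and `meets_minimum` are hypothetical helpers. The minimum versions come from the text, the version parsing is deliberately simple (it drops local tags such as `+cu117` and treats pre-releases like `2.0.0a0` as their release number), and it assumes the `espeak-ng` package installs an `espeak-ng` executable on `PATH`.

```python
import re
import shutil
from importlib import metadata
from typing import List, Tuple

# Minimum versions taken from the prerequisites above.
MIN_VERSIONS = {"torch": (1, 13), "torchaudio": (0, 13)}


def parse_version(version: str) -> Tuple[int, ...]:
    """Return the leading numeric release components, e.g. '1.13.1+cu117' -> (1, 13, 1)."""
    release = version.split("+", 1)[0]  # drop local tags such as '+cu117'
    parts = []
    for piece in release.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
        if match.end() != len(piece):  # stop at suffixes such as '0a0' or '0rc1'
            break
    return tuple(parts)


def meets_minimum(installed: str, minimum: Tuple[int, ...]) -> bool:
    """Tuple comparison: (1, 13, 1) >= (1, 13) is True, (1, 12, 9) >= (1, 13) is False."""
    return parse_version(installed) >= minimum


def check_environment() -> List[str]:
    """Collect human-readable problems; an empty list means the checks passed."""
    problems = []
    for pkg, minimum in MIN_VERSIONS.items():
        try:
            installed = metadata.version(pkg)
        except metadata.PackageNotFoundError:
            problems.append(f"{pkg} is not installed")
            continue
        if not meets_minimum(installed, minimum):
            problems.append(f"{pkg} {installed} < {'.'.join(map(str, minimum))}")
    try:
        metadata.version("phonemizer")
    except metadata.PackageNotFoundError:
        problems.append("phonemizer is not installed")
    if shutil.which("espeak-ng") is None:
        problems.append("espeak-ng executable not found on PATH")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("OK" if not issues else "\n".join(issues))
```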

Highlighted Details

- Reported to achieve human-level quality in zero-shot speech synthesis.

- Reported to outperform LLM-based and diffusion-based models in the authors' experiments.

- Supports both Text-to-Speech (TTS) and Voice Conversion (VC).

- Includes a 16 kHz to 48 kHz speech super-resolution framework.

Maintenance & Community

The project is an extension of previous works like HierSpeech and HierVST. Updates are periodically released, with ongoing work on TTV-v2 and code cleanup. Links to previous works and baseline models are provided.

Licensing & Compatibility

The repository is released under the MIT License, allowing for commercial use and integration with closed-source projects.

Limitations & Caveats

The project notes slow training speed and a relatively large model size compared to simpler models like VITS. It cannot currently generate realistic background sounds and may struggle with very long sentences due to training settings. The denoiser component may cause out-of-memory issues with long reference audio.
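Two of these limitations suggest simple pre-processing workarounds, sketched below under stated assumptions. Neither is part of the repository: `split_long_text` and `crop_reference` are hypothetical helpers, and the defaults (`max_chars=200`, `max_seconds=10.0`, a 16 kHz reference) are arbitrary placeholders rather than values from the project. The first packs sentences into bounded chunks so each synthesis call stays short; the second truncates a long reference prompt before it reaches the denoiser.

```python
import re
from typing import List, Sequence


def split_long_text(text: str, max_chars: int = 200) -> List[str]:
    """Pack sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars is kept as its own chunk
    rather than being cut mid-sentence.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if not current:
            current = sentence
        elif len(current) + 1 + len(sentence) <= max_chars:
            current += " " + sentence
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def crop_reference(wav: Sequence[float], sample_rate: int = 16_000,
                   max_seconds: float = 10.0) -> List[float]:
    """Keep only the first max_seconds of the reference prompt to bound denoiser memory."""
    max_samples = int(sample_rate * max_seconds)
    return list(wav[:max_samples])


print(split_long_text("First sentence. Second one! A third?", max_chars=20))
# ['First sentence.', 'Second one! A third?']
```

Each chunk would then be synthesized separately and the resulting waveforms concatenated; how to smooth the joins, and what chunk length best matches the model's training utterances, are left as assumptions to tune.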

2 years ago

Inactive

- PolyAI-LDN

- SpeechifyInc

- OpenMOSS

- Rongjiehuang

- keonlee9420

- VITA-MLLM

- FireRedTeam

- boson-ai

- yl4579

- SparkAudio

- espnet

- RVC-Boss