
ProDiff by Rongjiehuang

PyTorch implementation for fast diffusion text-to-speech

Top 68.8% on SourcePulse

ProDiff offers an extremely fast, high-fidelity text-to-speech (TTS) pipeline for industrial deployment, leveraging conditional diffusion probabilistic models. It targets researchers and developers seeking efficient and high-quality speech synthesis solutions.

How It Works

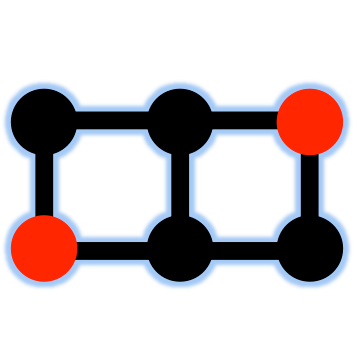

The pipeline has two stages: ProDiff, the acoustic model, and FastDiff, the neural vocoder. Both are diffusion models, and the number of reverse sampling steps in each can be adjusted, so synthesis can be made faster by running fewer steps. The design prioritizes speed without significantly compromising speech quality, making it suitable for real-time or near-real-time applications.
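To make the idea of an adjustable number of reverse sampling steps concrete, here is a minimal, generic sketch of picking a shorter sub-schedule out of a longer diffusion schedule. It is an illustration only, not ProDiff's or FastDiff's actual sampler; the function name `select_timesteps` and the even spacing are assumptions made for this example.

```python
from typing import List


def select_timesteps(total_steps: int, n_steps: int) -> List[int]:
    """Pick n_steps evenly spaced timesteps from a schedule of total_steps.

    Hypothetical helper for illustration: fewer steps means fewer network
    evaluations (faster synthesis), usually at some cost in quality.
    """
    if not 1 <= n_steps <= total_steps:
        raise ValueError("n_steps must be between 1 and total_steps")
    if n_steps == 1:
        return [total_steps - 1]
    last = total_steps - 1
    # Integer arithmetic keeps the indices exact and strictly increasing.
    ascending = [last * i // (n_steps - 1) for i in range(n_steps)]
    # Reverse sampling runs from the noisiest timestep down to 0.
    return ascending[::-1]


print(select_timesteps(1000, 4))  # [999, 666, 333, 0]
```

A sampler built on such a schedule would call the denoising network once per selected timestep, which is why reducing `n_steps` shortens inference.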

Quick Start & Requirements

- Install/Run: Clone the repository. Download checkpoints using `snapshot_download` from Hugging Face Hub and move them to the `checkpoints/` directory.

- Prerequisites: NVIDIA GPU with CUDA and cuDNN, PyTorch, librosa.

- Setup: Requires downloading pre-trained models. Inference setup is straightforward following the provided commands.

- Links: Demo Page, FastDiff
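The checkpoint step above can be sketched roughly as follows. This assumes the `huggingface_hub` package is installed; the Hub repository ID is not given here, so it is left as a parameter, and the helper names `download_snapshot` and `copy_into_checkpoints` are hypothetical. The sketch copies rather than moves the files, because the Hub cache may hold them as symlinks.

```python
import shutil
from pathlib import Path
from typing import List


def download_snapshot(repo_id: str) -> Path:
    """Download a full repository snapshot from the Hub and return its local path."""
    # Imported lazily so the copy helper below works without the package.
    from huggingface_hub import snapshot_download

    return Path(snapshot_download(repo_id=repo_id))


def copy_into_checkpoints(snapshot_dir, checkpoints_dir="checkpoints") -> List[str]:
    """Copy the snapshot contents into checkpoints_dir and list what is there."""
    dest = Path(checkpoints_dir)
    # copytree resolves symlinks by default, so real files land in dest.
    shutil.copytree(snapshot_dir, dest, dirs_exist_ok=True)
    return sorted(p.name for p in dest.iterdir())
```

With a real repository ID substituted in, `copy_into_checkpoints(download_snapshot(repo_id))` would populate `checkpoints/`. Alternatively, `snapshot_download` accepts a `local_dir` argument that writes the files to a chosen directory directly.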

Highlighted Details

- Extremely fast diffusion TTS pipeline.

- PyTorch implementation of ProDiff (ACM-MM'22) and FastDiff (IJCAI 2022).

- Supports speed-quality trade-offs via adjustable sampling iterations.

- Provides pre-trained models for LJSpeech.

Maintenance & Community

- The project is associated with authors from multiple institutions, indicating potential academic backing.

- Links to related projects like FastDiff and NATSpeech are provided.

Licensing & Compatibility

- The repository does not explicitly state a license. However, the disclaimer prohibits using the technology to generate speech without consent, which may imply usage restrictions beyond typical open-source licenses.

Limitations & Caveats

- The primary pre-trained model is for LJSpeech; support for more datasets is pending.

- The disclaimer regarding consent for speech generation suggests potential legal or ethical considerations for commercial use.

2 years ago

Inactive
