retrieval-backend-with-rag by bangoc123

Vietnamese RAG evaluation framework for product chatbots

Top 98.1% on SourcePulse

This project provides a RAG (Retrieval-Augmented Generation) backend framework with a focus on best practices for Vietnamese language applications. It offers a comprehensive evaluation suite for RAG components, including retrieval, re-ranking, and LLM answer quality, aiming to ensure grounded and accurate responses. The framework is designed for developers building sophisticated chatbots and Q&A systems that leverage proprietary data in Vietnamese.

How It Works

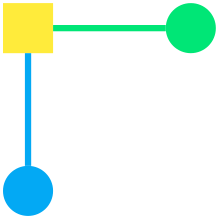

The core architecture facilitates product data retrieval and question answering using a RAG pipeline. It integrates with various LLM providers (OpenAI, Gemini, Together AI, Ollama, vLLM, HuggingFace, ONNX) and vector databases (MongoDB, Qdrant). A Semantic Router is employed to handle casual conversations, enhancing the chatbot's conversational capabilities beyond direct data retrieval. The system emphasizes groundedness by measuring how well responses are supported by provided context, thereby reducing hallucinations.
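
The routing idea can be illustrated with a minimal sketch. It assumes a router that compares a query against a few example utterances per route and falls back to product retrieval when nothing matches well. The route names, sample utterances, threshold, and the toy bag-of-words `embed` function are all hypothetical stand-ins; the project's actual router and embedding model may differ.

```python
import math
from collections import Counter

# Hypothetical example utterances per route. A real router would
# typically compare sentence embeddings from a model, not word counts.
ROUTES = {
    "chitchat": ["hello how are you", "good morning", "thank you very much"],
    "products": ["what is the price of this phone", "do you have this laptop in stock"],
}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector, used here in place of a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def route(query: str, threshold: float = 0.2) -> str:
    """Return the best-matching route, defaulting to product retrieval."""
    q = embed(query)
    best_route, best_score = "products", 0.0
    for name, samples in ROUTES.items():
        score = max(cosine(q, embed(s)) for s in samples)
        if score > best_score:
            best_route, best_score = name, score
    # Below the threshold, assume the query needs the RAG pipeline.
    return best_route if best_score >= threshold else "products"
```

A query routed to `chitchat` would be answered directly by the LLM, while anything else would go through retrieval first.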

Quick Start & Requirements

- Installation: Requires Python >= 3.12. Install dependencies via `pip install -r requirements.txt`.

- Prerequisites: Environment variables must be configured for the chosen LLM providers (Gemini, OpenAI, Together AI, Ollama, vLLM) and vector databases (MongoDB, Qdrant). A MongoDB Atlas vector search index must also be created.

- Running the Server: Supports multiple modes: `online` (with OpenAI/Gemini) and `offline` (with Ollama, HuggingFace, ONNX). Example commands are provided for different LLM configurations.

- Client: An open-source chatbot client is available via Docker (`docker pull protonx/protonx-open-source:protonx-chat-client-v01`).

- Links: Demo slides and video are available. GitHub for the UI client: `protonx-ai-app-UI`.
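
Since the server depends on environment variables for whichever provider and vector database is selected, a small pre-flight check can catch missing configuration before startup. The sketch below is illustrative only: the variable names in `REQUIRED_ENV` are hypothetical placeholders, and the names the project actually reads are defined in its README.

```python
import os

# Hypothetical variable names for illustration; check the project's
# README for the names it actually expects.
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "gemini": ["GEMINI_API_KEY"],
    "mongodb": ["MONGODB_URI"],
    "qdrant": ["QDRANT_URL"],
}


def missing_env(components, env=None):
    """List required variables that are unset or empty for the given components."""
    env = os.environ if env is None else env
    return [
        name
        for component in components
        for name in REQUIRED_ENV.get(component, [])
        if not env.get(name)
    ]
```

Calling `missing_env(["openai", "mongodb"])` before launching the server returns an empty list when everything needed is set.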

Highlighted Details

- Comprehensive evaluation framework covering Retrieval, ReRank (nDCG), LLM Answer (BLEU, ROUGE), and Groundedness benchmarks.

- Supports a wide array of LLM backends including local options via Ollama, vLLM, HuggingFace, and ONNX.

- Integrated vector database support for MongoDB and Qdrant, with specific instructions for MongoDB Atlas.

- Open-source Dockerized client simplifies deployment and testing of the RAG backend.
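
For readers unfamiliar with the re-ranking metric named above, here is a minimal sketch of nDCG (normalized discounted cumulative gain) using the common linear-gain formulation. It is a generic textbook version, not the project's evaluation code, and the function names are illustrative.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain: each relevance is discounted by log2(rank + 1)."""
    # enumerate() is 0-based, so rank + 2 gives log2(2) = 1 for the top result.
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances))


def ndcg(relevances, k=None):
    """nDCG of a ranked list of graded relevance labels, optionally cut off at k."""
    ranked = list(relevances)[:k] if k else list(relevances)
    # The ideal ranking sorts all available labels from most to least relevant.
    ideal = sorted(relevances, reverse=True)[: len(ranked)]
    ideal_dcg = dcg(ideal)
    return dcg(ranked) / ideal_dcg if ideal_dcg else 0.0
```

A re-ranker that places the most relevant documents first scores 1.0, and the score drops as relevant documents are pushed lower in the list.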

Maintenance & Community

Information regarding maintainers, community channels (like Discord/Slack), or a public roadmap is not detailed in the provided README.

Licensing & Compatibility

The specific open-source license and any compatibility notes for commercial use or closed-source linking are not explicitly stated in the provided README content.

Limitations & Caveats

The guide for setting up Qdrant as a vector database is noted as "will be updated soon." The primary focus on Vietnamese language best practices may require adaptation for other linguistic contexts.

4 months ago

Inactive