
riffusion-app-hobby by riffusion

Web app for real-time music generation using Stable Diffusion

Top 17.3% on SourcePulse

Riffusion App provides a web interface for real-time music generation using Stable Diffusion models. It targets musicians, artists, and developers interested in AI-powered audio creation, offering a user-friendly platform for exploring and generating novel musical pieces.

How It Works

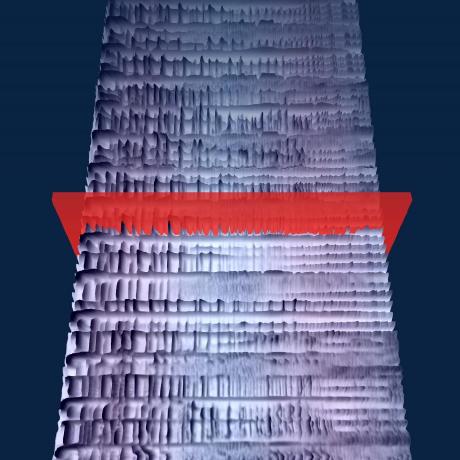

The application uses Stable Diffusion, a latent diffusion model, to generate audio spectrograms from text prompts. These spectrograms are then converted into audible music. The frontend is built with Next.js, React, and TypeScript, uses three.js for 3D visualizations and Tailwind CSS for styling, and is deployed on Vercel.
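To make the spectrogram-to-audio step concrete, here is a minimal sketch of one naive way to turn a magnitude spectrogram into samples: additive synthesis, which sums one sine wave per frequency bin. The README summary does not say how the project performs this conversion, so this is an illustration only and not the project's method. The function name `spectrogramToSamples` and all of its parameters are hypothetical.

```typescript
// Naive additive synthesis (illustrative assumption, not the project's method).
// spectrogram[t][k] is the magnitude of frequency bin k during time frame t.
function spectrogramToSamples(
  spectrogram: number[][],
  binFrequenciesHz: number[],
  sampleRate: number,
  samplesPerFrame: number,
): Float32Array {
  const out = new Float32Array(spectrogram.length * samplesPerFrame);

  for (let t = 0; t < spectrogram.length; t++) {
    for (let n = 0; n < samplesPerFrame; n++) {
      const i = t * samplesPerFrame + n;
      const time = i / sampleRate;
      let sample = 0;
      // Sum one sinusoid per bin, weighted by that bin's magnitude.
      for (let k = 0; k < binFrequenciesHz.length; k++) {
        sample += spectrogram[t][k] * Math.sin(2 * Math.PI * binFrequenciesHz[k] * time);
      }
      out[i] = sample;
    }
  }

  // Normalize to [-1, 1] so the buffer is safe to play back.
  let peak = 0;
  for (let i = 0; i < out.length; i++) peak = Math.max(peak, Math.abs(out[i]));
  if (peak > 0) {
    for (let i = 0; i < out.length; i++) out[i] /= peak;
  }
  return out;
}
```

Because a magnitude spectrogram carries no phase information, this sketch simply starts every sinusoid at zero phase. Real systems typically estimate phase with an iterative algorithm, which yields far cleaner audio.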

Quick Start & Requirements

- Install packages: `npm install` or `yarn install`

- Run development server: `npm run dev` or `yarn dev`

- Requires Node.js v18 or greater.

- An inference server (Flask app) is needed for actual model output generation, requiring a GPU capable of running Stable Diffusion quickly. The inference server URL must be specified in a `.env.local` file.
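As a rough sketch of the last requirement, the helper below reads the inference server URL from an environment-style map and validates it before use. The variable name `INFERENCE_SERVER_URL` and the function `resolveInferenceUrl` are placeholders invented for this example; the project's README defines the actual variable name expected in `.env.local`.

```typescript
// Hypothetical variable name; substitute the one the project's README specifies.
const ENV_VAR = "INFERENCE_SERVER_URL";

// Read and validate the inference server URL from an environment-like map.
function resolveInferenceUrl(env: Record<string, string | undefined>): URL {
  const raw = env[ENV_VAR];
  if (!raw || raw.trim() === "") {
    throw new Error(`${ENV_VAR} is not set; add it to .env.local`);
  }
  // The URL constructor throws a TypeError on malformed input.
  const url = new URL(raw.trim());
  if (url.protocol !== "http:" && url.protocol !== "https:") {
    throw new Error(`${ENV_VAR} must be an http(s) URL, got ${url.protocol}`);
  }
  return url;
}

// In a Next.js server context this could be called as:
//   const inferenceUrl = resolveInferenceUrl(process.env);
```

Failing fast on a missing or malformed URL gives a clear error at startup instead of an opaque network failure on the first generation request.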

Highlighted Details

- Real-time music generation via text prompts.

- Web application built with modern frontend technologies (Next.js, React, TypeScript).

- Utilizes Stable Diffusion for audio synthesis.

Maintenance & Community

This project is no longer actively maintained.

Licensing & Compatibility

The license is not explicitly stated in the README.

Limitations & Caveats

The project is explicitly marked as "no longer actively maintained," which may indicate a lack of future updates, bug fixes, or community support. Running the full functionality requires a separate, GPU-intensive inference server.

1 year ago

Inactive
