
riffusion-hobby by riffusion


Library for real-time music and audio generation using Stable Diffusion

Top 12.4% on SourcePulse

Riffusion (hobby) is a library for real-time music and audio generation using Stable Diffusion, aimed at musicians, sound designers, and researchers. It creates audio from text prompts and from spectrogram images.

How It Works

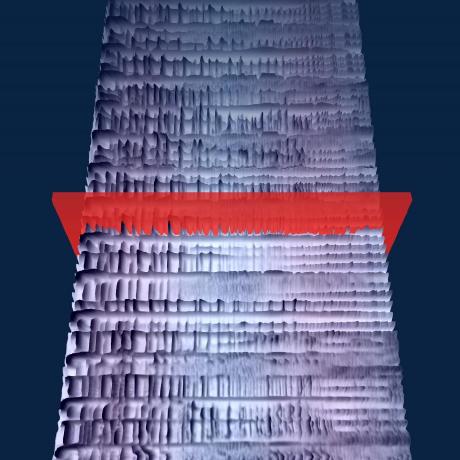

Riffusion generates audio by running Stable Diffusion on spectrograms, which it treats as ordinary images. Within the diffusion pipeline it supports prompt interpolation and image conditioning: interpolating between two text prompts, or conditioning on an existing spectrogram, produces smooth transitions between musical styles or sounds.
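The interpolation idea can be illustrated with a minimal sketch. It assumes each prompt has already been encoded as a plain list of floats; the function names `lerp` and `slerp` are illustrative and are not taken from the Riffusion codebase. Linear interpolation is the simplest way to blend two embeddings, and spherical interpolation is a common choice when blending Gaussian noise vectors, since it keeps the vector norm roughly constant.

```python
import math
from typing import List


def lerp(a: List[float], b: List[float], alpha: float) -> List[float]:
    """Linear blend: alpha=0 returns a, alpha=1 returns b."""
    return [x + alpha * (y - x) for x, y in zip(a, b)]


def slerp(a: List[float], b: List[float], alpha: float) -> List[float]:
    """Spherical blend along the arc between a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    if abs(sin_theta) < 1e-6:
        # Vectors are (anti)parallel; fall back to a linear blend.
        return lerp(a, b, alpha)
    weight_a = math.sin((1.0 - alpha) * theta) / sin_theta
    weight_b = math.sin(alpha * theta) / sin_theta
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


if __name__ == "__main__":
    # Toy 2-D "embeddings" standing in for two encoded prompts.
    start, end = [1.0, 0.0], [0.0, 1.0]
    for step in range(5):
        alpha = step / 4
        print(alpha, lerp(start, end, alpha), slerp(start, end, alpha))
```

Sweeping `alpha` from 0 to 1 across successive clips is what yields a gradual transition from one prompt to the other.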

Quick Start & Requirements

- Install dependencies: `python -m pip install -r requirements.txt`

- Requires Python 3.9 or 3.10.

- `ffmpeg` is required for audio formats other than WAV.

- CUDA-enabled GPU (e.g., 3090, A10G) recommended for real-time performance. MPS backend supported on Apple Silicon with potential fallbacks.

- Official documentation: https://www.riffusion.com/about
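The requirements above can be checked before running anything with a small pre-flight script. This is a sketch and not part of the project: the helper names `pick_device` and `check_environment` are hypothetical, and the script assumes PyTorch may or may not be installed yet, defaulting to CPU when it is missing.

```python
import shutil
import sys


def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what PyTorch reports."""
    try:
        import torch
    except ImportError:
        # PyTorch not installed yet; nothing to probe.
        return "cpu"
    if torch.cuda.is_available():
        return "cuda"
    # torch.backends.mps only exists in newer PyTorch releases.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


def check_environment() -> dict:
    """Collect the checks listed in the quick-start requirements."""
    return {
        "python_ok": sys.version_info[:2] in ((3, 9), (3, 10)),
        "ffmpeg_found": shutil.which("ffmpeg") is not None,
        "device": pick_device(),
    }


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value}")
```

A result of `device: cpu` means generation will still run, but not at real-time speed.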

Highlighted Details

- Real-time music and audio generation via Stable Diffusion.

- Converts between spectrogram images and audio clips.

- Includes a command-line interface and an interactive Streamlit app.

- Provides a Flask server for API-based model inference.
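As an illustration of API-based inference, the sketch below builds a JSON request for a locally running server and posts it with the standard library. Everything specific here is an assumption that should be checked against the repository before use: the field names (`alpha`, `seed_image_id`, `start`, `end`, and their sub-fields), the default values, and the example URL are recalled from the upstream README rather than confirmed by this page, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_inference_request(start_prompt: str, end_prompt: str, alpha: float,
                            seed_image_id: str = "og_beat",
                            steps: int = 50) -> dict:
    """Build a request body; all field names are assumed, not confirmed."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")

    def endpoint(prompt: str, seed: int) -> dict:
        return {"prompt": prompt, "seed": seed,
                "denoising": 0.75, "guidance": 7.0}

    return {
        "alpha": alpha,                      # position between the two prompts
        "num_inference_steps": steps,
        "seed_image_id": seed_image_id,      # conditioning spectrogram (assumed)
        "start": endpoint(start_prompt, seed=42),
        "end": endpoint(end_prompt, seed=123),
    }


def post_json(url: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST a JSON payload and decode the JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    body = build_inference_request("church bells", "jazz with piano", alpha=0.5)
    print(json.dumps(body, indent=2))
    # Assumed local URL; uncomment only once a server is actually running.
    # result = post_json("http://127.0.0.1:3013/run_inference/", body)
```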

Maintenance & Community

This project is no longer actively maintained.

Licensing & Compatibility

The repository does not explicitly state a license. The associated website and model checkpoints may have different licensing terms.

Limitations & Caveats

The project is explicitly marked as "no longer actively maintained." CPU inference is supported but described as "quite slow" for real-time generation. On Apple Silicon, the MPS backend may fall back to CPU for audio processing and is not deterministic.

1 year ago

Inactive
