Make-An-Audio by Text-to-Audio

Text-to-audio generation with diffusion models

Make-An-Audio provides a PyTorch implementation of a text-to-audio generative model based on conditional diffusion probabilistic models. It allows users to generate high-fidelity audio from text prompts, targeting researchers and developers in the audio generation space. The project offers pre-trained models and a clear implementation, enabling efficient and high-quality audio synthesis.

How It Works

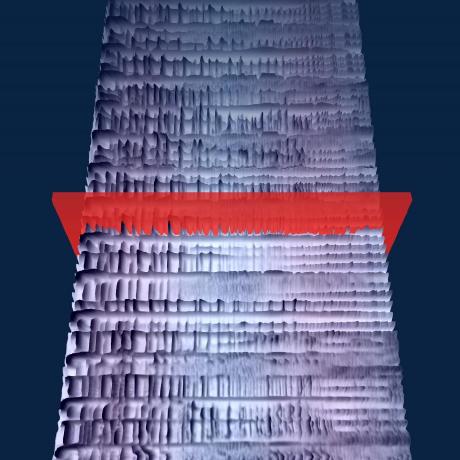

The model uses a prompt-enhanced, conditional diffusion probabilistic model: the diffusion process is conditioned on the text prompt, which lets it generate high-fidelity audio efficiently. The architecture likely consists of a diffusion model that learns to denoise data under the guidance of text embeddings (CLAP weights are among the required checkpoints), possibly a VAE that provides the latent space, and a vocoder (like BigVGAN) for waveform synthesis.
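The text-guided denoising described above can be illustrated with a toy sampler. This is a minimal sketch, not the repository's code: it assumes the sampler is a deterministic DDIM loop and that the text conditioning is applied through classifier-free guidance (which is what a `--scale` flag usually controls in Stable Diffusion-derived code). The learned network is replaced by a hypothetical `oracle_eps` function that knows the target, and all function names are invented for illustration.

```python
import math
import random


def guided_eps(eps_uncond, eps_cond, scale):
    """Classifier-free guidance: extrapolate from the unconditional
    noise estimate toward the text-conditioned one."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def ddim_step(x_t, eps, alpha_t, alpha_prev):
    """One deterministic DDIM update (eta = 0) on a list of floats."""
    x0_pred = [(x - math.sqrt(1 - alpha_t) * e) / math.sqrt(alpha_t)
               for x, e in zip(x_t, eps)]
    return [math.sqrt(alpha_prev) * p + math.sqrt(1 - alpha_prev) * e
            for p, e in zip(x0_pred, eps)]


def oracle_eps(x_t, target, alpha_t):
    """Toy stand-in for the learned denoiser (an assumption): the exact
    noise that would turn `target` into `x_t` at level `alpha_t`."""
    return [(x - math.sqrt(alpha_t) * t) / math.sqrt(1 - alpha_t)
            for x, t in zip(x_t, target)]


def sample(target, scale=1.0, steps=10, seed=0):
    """Run the toy guided DDIM loop and return the final sample."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure noise
    # Cumulative signal levels, ordered from very noisy to almost clean.
    levels = [(k + 1) / (steps + 1) for k in range(steps)]
    zeros = [0.0] * len(target)  # plays the role of the empty prompt
    for i, alpha_t in enumerate(levels):
        alpha_prev = levels[i + 1] if i + 1 < steps else 1.0
        eps = guided_eps(oracle_eps(x, zeros, alpha_t),
                         oracle_eps(x, target, alpha_t),
                         scale)
        x = ddim_step(x, eps, alpha_t, alpha_prev)
    return x
```

With `scale=1.0` the loop lands on the conditioned target; larger scales push the sample further in the conditioned direction, which in this toy shows up as an overshoot proportional to the scale. In the real model the denoiser is a trained network operating on audio representations, so the effect of the scale is less tidy.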

Quick Start & Requirements

- Install/Run: Clone the repository and use provided Python scripts for inference and training.

- Prerequisites: NVIDIA GPU with CUDA and cuDNN, Python. Specific checkpoints (maa1_full.ckpt, BigVGAN vocoder, CLAP weights) need to be downloaded and placed in ./useful_ckpts.

- Setup: Requires downloading several large checkpoint files. Inference command example: `python gen_wav.py --prompt "a bird chirps" --ddim_steps 100 --duration 10 --scale 3 --n_samples 1 --save_name "results"`

- Links: HuggingFace Spaces for demos are available.
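Before running inference it can help to confirm that the checkpoints are in place and to assemble the command programmatically. The following is a rough sketch under stated assumptions: the helper names `missing_checkpoints` and `build_gen_wav_command` are hypothetical, only `maa1_full.ckpt` is checked because the file names of the BigVGAN and CLAP checkpoints are not given here, and the flags are copied from the example command above rather than from the script's argument parser.

```python
import shlex
from pathlib import Path


def missing_checkpoints(ckpt_dir, required=("maa1_full.ckpt",)):
    """Return the required checkpoint file names not found in ckpt_dir."""
    ckpt_dir = Path(ckpt_dir)
    return [name for name in required if not (ckpt_dir / name).is_file()]


def build_gen_wav_command(prompt, ddim_steps=100, duration=10, scale=3,
                          n_samples=1, save_name="results"):
    """Build the gen_wav.py argument list using the flags from the
    example command (defaults mirror that example)."""
    return [
        "python", "gen_wav.py",
        "--prompt", prompt,
        "--ddim_steps", str(ddim_steps),
        "--duration", str(duration),
        "--scale", str(scale),
        "--n_samples", str(n_samples),
        "--save_name", save_name,
    ]


if __name__ == "__main__":
    missing = missing_checkpoints("./useful_ckpts")
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    # Print the command rather than executing it; pass the list to
    # subprocess.run(cmd, check=True) from the repository root to run it.
    print(shlex.join(build_gen_wav_command("a bird chirps")))
```

Building the command as a list avoids shell-quoting problems when a prompt contains quotes or other special characters.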

Highlighted Details

- Implements Make-An-Audio (ICML'23), a text-to-audio diffusion model.

- Supports audio inpainting via a separate HuggingFace Space.

- Provides scripts for dataset preprocessing, VAE training, diffusion model training, and various audio quality evaluations (FD, FAD, IS, KL, CLAP score).

- Includes an Audio2Audio script for audio style transfer or modification.
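Two of the evaluation metrics listed above reduce to simple formulas once the model outputs are available. The sketch below is illustrative only and does not reproduce the repository's evaluation scripts: it assumes a CLAP score is the cosine similarity between a text embedding and an audio embedding, and that KL is computed between two label probability distributions (commonly produced by an audio classifier). The vectors used here are placeholders, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same labels.
    `eps` guards against log(0) when q assigns zero probability."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)


if __name__ == "__main__":
    text_emb = [0.2, 0.9, 0.1]    # placeholder text embedding
    audio_emb = [0.25, 0.8, 0.0]  # placeholder audio embedding
    print("CLAP-style score:", cosine_similarity(text_emb, audio_emb))

    reference = [0.7, 0.2, 0.1]   # placeholder label probabilities
    generated = [0.6, 0.3, 0.1]
    print("KL:", kl_divergence(reference, generated))
```

A higher cosine similarity indicates closer agreement between prompt and audio, while a lower KL indicates that generated and reference clips are classified more alike.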

Maintenance & Community

The project is associated with ICML'23 and has an arXiv preprint. It references code from CLAP and Stable Diffusion repositories. No specific community links (Discord, Slack) or active maintenance signals are provided in the README.

Licensing & Compatibility

The README does not explicitly state a license. However, the disclaimer warns against using the technology to generate speech without consent, implying potential legal and ethical considerations. Compatibility for commercial use or closed-source linking is not specified.

Limitations & Caveats

Dataset download links are not provided due to copyright issues, requiring users to source their own audio data. The disclaimer strongly advises against unauthorized speech generation, highlighting ethical and legal risks. The project's reliance on specific checkpoint files and a potentially complex training pipeline may present adoption challenges.
