metal-flash-attention by philipturner

philipturner

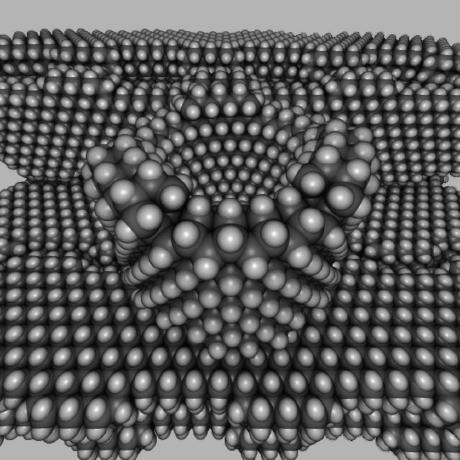

Metal port of FlashAttention for Apple silicon

Top 55.4% on SourcePulse

This repository provides a Metal port of FlashAttention, optimized for Apple Silicon. It targets researchers and developers working with large language models on Apple hardware, offering a performant and memory-efficient implementation of the attention mechanism.

How It Works

The port focuses on single-headed attention and is tuned specifically for Metal's architecture. It relieves register pressure, the main bottleneck, through novel blocking strategies and intentional register spilling, which lets it reach high ALU utilization. The backward pass is redesigned to trade a higher compute cost for better parallelization, which avoids the FP32 atomics that would otherwise have to be emulated on Apple hardware.
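To make the blocking idea concrete, here is a minimal pure-Python sketch of the general FlashAttention forward pass for a single head. It is not the repository's Metal kernel: the function names and the `block` parameter are illustrative assumptions, and the code only shows how keys and values can be streamed in blocks while a running maximum, running sum, and accumulator are kept per query row.

```python
import math


def naive_attention(Q, K, V):
    """Reference version: computes a full row of scores for each query at once."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    out = []
    for q in Q:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in K]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[d] for w, v in zip(weights, V)) / total
                    for d in range(len(V[0]))])
    return out


def blocked_attention(Q, K, V, block=2):
    """Streams K/V in blocks; per query row only a max, a sum, and an accumulator persist."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    head_dim = len(V[0])
    out = []
    for q in Q:
        running_max = -math.inf
        running_sum = 0.0
        acc = [0.0] * head_dim
        for start in range(0, len(K), block):
            k_blk = K[start:start + block]
            v_blk = V[start:start + block]
            scores = [scale * sum(a * b for a, b in zip(q, k)) for k in k_blk]
            new_max = max(running_max, max(scores))
            # Rescale earlier partial results to the new maximum (0.0 on the first block).
            correction = math.exp(running_max - new_max)
            weights = [math.exp(s - new_max) for s in scores]
            running_sum = running_sum * correction + sum(weights)
            acc = [a * correction + sum(w * v[d] for w, v in zip(weights, v_blk))
                   for d, a in enumerate(acc)]
            running_max = new_max
        out.append([a / running_sum for a in acc])
    return out
```

Both functions return the same values up to floating-point rounding, whatever the block size. On a GPU, the block size decides how much of the accumulator has to live in registers at once, which is where the register-pressure trade-offs described above come in.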

Quick Start & Requirements

- Install via Swift Package Manager or by cloning the repository.

- Requires macOS and Xcode.

- Compile with `-Xswiftc -Ounchecked` for performance.

- See Usage for detailed setup.

Highlighted Details

- Achieves 4400 gigainstructions per second on M1 Max.

- Backward pass uses less memory than the official implementation.

- Novel backward pass design with 100% parallelization efficiency.

- Optimized register spilling for large head dimensions.
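One way a backward pass can trade extra compute for atomics-free parallelism is sketched below in pure Python. The split into a per-query-row pass and a per-key-row pass is an assumption made for illustration and is not confirmed by this summary; the function names are hypothetical. Each pass recomputes the attention probabilities from the stored log-sum-exp values instead of reading a saved N x N matrix, and each output row is written by exactly one loop iteration, so no two workers ever accumulate into the same location.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def forward(Q, K, V):
    """Returns outputs O and per-row log-sum-exp L, the only O(N) state kept for backward."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    O, L = [], []
    for q in Q:
        s = [scale * dot(q, k) for k in K]
        m = max(s)
        lse = m + math.log(sum(math.exp(x - m) for x in s))
        p = [math.exp(x - lse) for x in s]
        O.append([sum(p[j] * V[j][d] for j in range(len(V)))
                  for d in range(len(V[0]))])
        L.append(lse)
    return O, L


def backward_dq(Q, K, V, O, L, dO):
    """Pass 1: iteration i alone writes dQ[i] (one worker per query row)."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    dQ = []
    for i, q in enumerate(Q):
        d_i = dot(dO[i], O[i])
        row = [0.0] * len(q)
        for j, k in enumerate(K):
            p = math.exp(scale * dot(q, k) - L[i])  # recomputed, never stored
            ds = p * (dot(dO[i], V[j]) - d_i)
            row = [r + scale * ds * kd for r, kd in zip(row, k)]
        dQ.append(row)
    return dQ


def backward_dkv(Q, K, V, O, L, dO):
    """Pass 2: iteration j alone writes dK[j] and dV[j] (one worker per key row)."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    D = [dot(dO[i], O[i]) for i in range(len(Q))]
    dK, dV = [], []
    for j, k in enumerate(K):
        dk = [0.0] * len(k)
        dv = [0.0] * len(V[j])
        for i, q in enumerate(Q):
            p = math.exp(scale * dot(q, k) - L[i])  # recomputed a second time
            ds = p * (dot(dO[i], V[j]) - D[i])
            dk = [a + scale * ds * qd for a, qd in zip(dk, q)]
            dv = [a + p * g for a, g in zip(dv, dO[i])]
        dK.append(dk)
        dV.append(dv)
    return dK, dV
```

The probabilities are evaluated twice, once per pass, which is the extra compute. In exchange, the only state carried from the forward pass is `O` and `L`, and neither pass needs atomic additions.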

Maintenance & Community

- Maintained by philipturner.

- No explicit community links (Discord/Slack) are provided in the README.

Licensing & Compatibility

- The README does not explicitly state a license.

Limitations & Caveats

- Currently supports only single-headed attention.

- BF16 emulation is used, which may incur overhead on older chips.

- Benchmarks suggest potential issues with large head dimensions (D=256) on some Nvidia hardware, though this port aims to address such limitations on Apple Silicon.
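For context on what BF16 emulation typically involves, the sketch below converts between FP32 and BF16 using integer bit operations only. This is the general technique, written in Python for readability, and not code taken from the repository; the helper names are hypothetical. BF16 keeps the sign, the 8 exponent bits, and the top 7 mantissa bits of an FP32 value, so a conversion is a 16-bit shift plus rounding.

```python
import struct


def float_to_bf16_bits(x):
    """Round an FP32 value to BF16 (round-to-nearest-even), returned as a 16-bit int."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if (bits & 0x7FFFFFFF) > 0x7F800000:
        # NaN: set a mantissa bit so truncation cannot turn it into infinity.
        return (bits >> 16) | 0x0040
    # Add 0x7FFF, plus 1 if the kept low bit is odd, so exact ties round to even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(h):
    """Expand a 16-bit BF16 pattern to FP32 by padding the mantissa with zeros."""
    return struct.unpack("<f", struct.pack("<I", (h & 0xFFFF) << 16))[0]
```

On hardware without native BF16 instructions, each load and store pays for these extra integer operations, which is the kind of overhead the caveat above refers to.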

1 year ago

Inactive
